When I switched to a MacBook as my primary computer, I needed to install all kinds of new software and find all kinds of new tools. Exploring new tools for a new platform can be fun. One of the choices I made was to use Sun xVM VirtualBox as my virtualization platform, so that I could run Linux and Windows virtual machines on my Mac.

I have been working with VirtualBox for some time now, and I have to say I love the product. However, one thing struck me as strange and unexpected, although after giving it some thought it made a lot of sense. While playing with the installation options, I removed a couple of VMs, and after a while I noticed that my disk was becoming quite full.

!! When you remove a virtual machine you do NOT remove the disk image !!

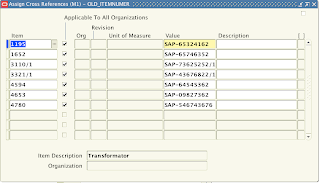

When I looked in the VirtualBox VDI directory, I noticed that all the VDI files, each around 10 GB in size, were still there. When you create a virtual machine, you first create a virtual disk and then install the operating system on that disk. The disk is stored as a VDI file, which can be as large as the virtual disk itself. Removing the VM does not remove this file, so you also have to remove the disk yourself. It is just something you need to know: clean up after yourself and remove the disks. This can be done via the Virtual Disk Manager.
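If you want to see how much space leftover images are taking before you clean up, a small script can list them by size. The sketch below is a minimal example, not a VirtualBox tool: it assumes your images live under `~/Library/VirtualBox/HardDisks` (where older Mac releases kept them; change `DEFAULT_DISK_DIR` if yours are elsewhere), and the function names are hypothetical helpers written for illustration. It only reads file sizes and never deletes anything.

```python
import os
from pathlib import Path

# Assumption: the folder holding your disk images; adjust for your installation.
DEFAULT_DISK_DIR = Path.home() / "Library" / "VirtualBox" / "HardDisks"


def find_disk_images(directory, extensions=(".vdi", ".vmdk", ".vhd")):
    """Return (path, size_in_bytes) for each disk image under directory, largest first."""
    images = []
    # os.walk simply yields nothing if the directory does not exist.
    for root, _dirs, files in os.walk(directory):
        for name in files:
            # Case-insensitive extension match; str.endswith accepts a tuple.
            if name.lower().endswith(extensions):
                path = os.path.join(root, name)
                images.append((path, os.path.getsize(path)))
    images.sort(key=lambda item: item[1], reverse=True)
    return images


def human_size(num_bytes):
    """Format a byte count using 1024-based units."""
    size = float(num_bytes)
    for unit in ("B", "KB", "MB", "GB"):
        if size < 1024:
            return f"{size:.1f} {unit}"
        size /= 1024
    return f"{size:.1f} TB"


if __name__ == "__main__":
    for path, size in find_disk_images(DEFAULT_DISK_DIR):
        print(f"{human_size(size):>10}  {path}")
```

Once you know which images belong to VMs you have already removed, it is probably safer to release and remove them through the Virtual Disk Manager rather than deleting the files by hand, so VirtualBox does not keep a reference to a disk that no longer exists.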

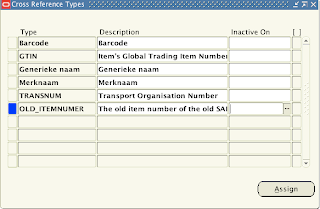

A note on VDI: it stands for Virtual Disk Image and is the native disk format of VirtualBox. VirtualBox can also use VMDK, the disk format from VMware, or VHD, the disk format from Microsoft.