The personal view on the IT world of Johan Louwers, specially focusing on Oracle technology, Linux and UNIX technology, programming languages and all kinds of nice and cool things happening in the IT world.

Thursday, December 08, 2016

Oracle Fabric Interconnect - connecting FC storage appliances in HA

Oracle Fabric Interconnect employs virtualization to enable you to flexibly connect servers to networks and storage. It eliminates the physical storage and networking cards found in every server and replaces them with virtual network interface cards (vNICs) and virtual host bus adapters (vHBAs) that can be deployed on the fly. Applications and operating systems see these virtual resources exactly as they would see their physical counterparts. The result is an architecture that is much easier to manage, far more cost-effective, and fully open.

Tuesday, October 06, 2015

Oracle Linux - generate MAC address

Generating a new MAC address can be done in multiple ways. The Python script below is just one example; it can be integrated fairly easily into wider Python code, or you can call it from a Bash script.

    #!/usr/bin/python
    # macgen.py script to generate a MAC address for guests on Xen
    #
    import random

    def randomMAC():
        # The first three bytes are fixed, the last three are random.
        mac = [0x00, 0x16, 0x3e,
               random.randint(0x00, 0x7f),
               random.randint(0x00, 0xff),
               random.randint(0x00, 0xff)]
        return ':'.join(map(lambda x: "%02x" % x, mac))

    # print() with parentheses works on both Python 2 and Python 3
    print(randomMAC())

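This script will simply provide you with a random MAC address. Note that only the last three bytes are random; the first three are fixed to 00:16:3e, the prefix used for Xen guests.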

Wednesday, March 12, 2014

Monitor your network connections on Linux

In those cases it is good to start monitoring which traffic is generated so you can investigate it and draw a network connection diagram. You can use logging on network switches, routers and firewalls for this. However, an easier way in my opinion is to make sure every workstation runs a copy of tcpspy, which collects data for some time and reports it back to a central location.

tcpspy is a little program that logs all connections the moment they connect or disconnect. By default tcpspy installs in such a way that it starts automatically as a daemon and writes everything it captures to /var/log/syslog. You can however create rules for what tcpspy needs to capture by editing the file /etc/tcpspy.rules or by entering a new rule with the tcpspy -e option.

Before implementing stricter local firewall rules on the workstations on my private home network I first had tcpspy running for a couple of weeks, extracted all information from /var/log/syslog to a central location and visualized it with a small D3.js implementation. This showed that a number of unexpected, however valid, network connections were made on a regular basis which I was unaware of.
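As a rough illustration of the step between collecting and visualizing, the sketch below turns connection records into the node-link structure that many D3.js force layouts consume. It assumes the syslog lines have already been parsed into (local host, remote host) pairs; the host names and the `build_graph` helper are hypothetical and not part of tcpspy or D3.js.

```python
import json
from collections import Counter

def build_graph(connections):
    """Turn (local_host, remote_host) pairs into a node-link dictionary."""
    counts = Counter(connections)
    hosts = sorted({host for pair in counts for host in pair})
    index = {host: i for i, host in enumerate(hosts)}
    return {
        "nodes": [{"id": host} for host in hosts],
        "links": [
            # "value" is how often this pair was seen; it can drive line width
            {"source": index[src], "target": index[dst], "value": seen}
            for (src, dst), seen in sorted(counts.items())
        ],
    }

# Hypothetical, already-parsed connection records
sample = [("ws1", "nas"), ("ws1", "nas"), ("ws2", "printer")]
graph = build_graph(sample)
print(json.dumps(graph, indent=2))
```

The JSON written this way can be saved to a file and loaded by the page that draws the diagram.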

Implementing this on your local home network is not that difficult, especially if you have a scripted way of rolling out tooling on all workstations in an automated manner. It might look a bit overdone in a home environment; however, as this can be considered a test drive for a blueprint to be implemented in a more business-like environment, it shows the value of being able to quickly visualize all internal and external network traffic.

When you are looking into ways to log all internal and external network connections made by a server or workstation it might be a good move to give tcpspy a look, and when you are looking into ways to visualize the data you receive you might be interested in the options provided by D3.js.

Tuesday, December 18, 2012

Oracle database and Cisco Firewall considerations

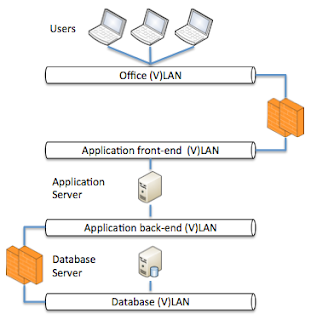

While it is good that everyone within an enterprise has a role and is dedicated to it, it is also a big disadvantage in some cases. The below image is a representation of how many application administrators and DBAs will draw their application landscape (for a simple single database / single application server design).

In essence this is correct, however in some cases too simplified. A network engineer will draw a completely different picture, a UNIX engineer will draw another picture and a storage engineer yet another, meaning you will have a couple of images. One thing however where a lot of disciplines will have to work together is security; the below picture shows the same setup as the image above, however now with some vital parts added to it.

The important part that is added in the above picture is that it shows the different network components like (V)LANs and firewalls. In this image we excluded routers, switches and such. The reason that this is important is that not understanding the location of firewalls can seriously harm the performance of your application. For many people who are not network people, a firewall is just something you pass your data through and it should not hinder you in any way. Firewall companies try to make this true, however in some cases they do not succeed.

Even though having a firewall between your application server and database server is a good idea, when not configured correctly it can influence the speed of your application dramatically. This is especially the case when you have large sets of data returned by your database.

The reason for this is how a firewall appliance, in our example a Cisco PIX/ASA, handles the SQL traffic, and how some default global inspection rules in firewalls are based on an old SQL*Net implementation of Oracle. By default a Cisco PIX/ASA has some inspect rules in place which do deep packet inspection of all communication that flows via the firewall. As Oracle by default uses port 1521, the firewall by default applies this inspection to all traffic going via port 1521 between the database and a client/application server.

The reason this is implemented dates back to the SQL*Net V1.x days, and it is still implemented in many firewalls because they want to be compatible with old versions of SQL*Net. Within SQL*Net V1.x you basically had 2 sessions with the database server.

1) Control sessions

2) Data sessions

You would connect with your client to the database on port 1521, and this is referred to as the "control session". As soon as your connection was established a new process would spawn on the server to handle the incoming requests. This new process received a new port to communicate with you for this particular session. The issue was that the firewall in the middle would prevent the new process from communicating with you as this port was not open. For this reason the inspect rules on the firewall would read all the data on the control session and look for a redirect message telling your client to communicate with the new process on the new port on the database server. As soon as the firewall found this message it would open a temporary port for communication between the database and your client. From that moment on all data would flow via the data session.

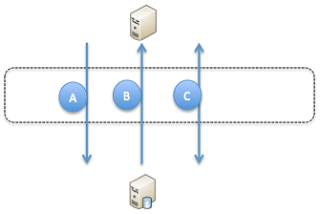

As you can see in the above image the flow is as follows:

A) Create sessions initiated by the client via the control session

B) Database server creates new process and sends a redirect message for the data session port

C) All data communication goes via the newly opened port on the firewall in the data session
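The three steps above can be sketched as a toy model. This is not the real SQL*Net wire protocol or the Cisco inspection engine: the `ToyFirewall` class and the `REDIRECT port=<n>` message format are made up purely to show the idea of a firewall that reads the control session and opens a port when it sees a redirect.

```python
import re

class ToyFirewall:
    """Toy model of an inspecting firewall (hypothetical, for illustration)."""

    def __init__(self, control_port=1521):
        # Only the control port is open to begin with.
        self.open_ports = {control_port}

    def inspect_control(self, payload):
        # Made-up redirect format; the real message looks different.
        match = re.search(r"REDIRECT port=(\d+)", payload)
        if match:
            # Step B: the server announced a data port, so open it.
            self.open_ports.add(int(match.group(1)))
        return payload  # the payload is forwarded unchanged

    def allows(self, port):
        return port in self.open_ports

firewall = ToyFirewall()
print(firewall.allows(40123))                      # data port still closed
firewall.inspect_control("REDIRECT port=40123")    # redirect seen on control session
print(firewall.allows(40123))                      # data port now open (step C)
```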

This model works quite OK for a SQL*Net V1.x implementation. However, in SQL*Net V2.x Oracle abandoned the split between a control session and a data session and introduced a "direct hand-off" implementation. This means that no new port will be opened and the data session and the control session are one. Due to this all the data flows via the control session, where your firewall is listening for a redirect that will never come.

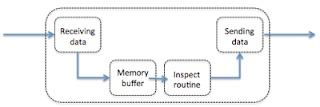

The design of the firewall is that all traffic on the control session will be received, placed in a memory buffer, processed by the inspect routine and then forwarded to the client. As the control session is not data intensive the memory buffer is very small and the processors handling the inspect are not very powerful.

As from SQL*Net V2.x all the data is sent over the control session and not over a specific data session, this means that all your data, all your result sets and everything your database can return will be received by your firewall and placed in the memory buffer to be inspected. The buffer in general is something around 2K, meaning it cannot hold much data and your communication will be slowed down by your firewall. In case you are using SQL*Net V2.x this is completely useless and there is no reason for having this inspect in place in your firewall. Best practice is to disable the inspect in your firewall as it has no use with the newest implementations of SQL*Net and will only slow things down.
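To get a feeling for the numbers, the small calculation below counts how many times an inspection buffer has to be filled and emptied for a given result set. It is a simplified model that ignores packet framing and protocol overhead, and it takes the "around 2K" figure from the text as exactly 2048 bytes, which is an assumption.

```python
import math

def buffer_passes(result_bytes, buffer_bytes=2048):
    """Number of times the buffer must be filled to pass result_bytes through."""
    return math.ceil(result_bytes / buffer_bytes)

# A 10 MB result set through a 2 KB buffer
print(buffer_passes(10 * 1024 * 1024))  # 5120
```

Every one of those passes goes through the inspect routine, which is why large result sets suffer the most.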

You can check on your firewall what is currently turned on and what not as shown in the example below:

    class-map inspection_default
     match default-inspection-traffic
    policy-map type inspect dns preset_dns_map
     parameters
      message-length maximum 512
    policy-map global_policy
     class inspection_default
      inspect dns preset_dns_map
      inspect ftp
      inspect h323 h225
      inspect h323 ras
      inspect rsh
      inspect rtsp
      inspect esmtp
      inspect sqlnet
      inspect skinny
      inspect sunrpc
      inspect xdmcp
      inspect sip
      inspect netbios
      inspect tftp
    service-policy global_policy global
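If you have saved such a configuration dump to a text file, a few lines of Python can list what is being inspected and flag the SQL*Net rule. This is only a sketch: the `inspected_protocols` helper is hypothetical and simply looks for lines starting with `inspect`, it does not understand the full configuration syntax.

```python
def inspected_protocols(config_text):
    """Return the protocol named on every 'inspect <protocol>' line."""
    protocols = []
    for line in config_text.splitlines():
        words = line.split()
        if len(words) >= 2 and words[0] == "inspect":
            protocols.append(words[1])
    return protocols

# Shortened sample in the same layout as the dump above
sample = """\
policy-map type inspect dns preset_dns_map
policy-map global_policy
 class inspection_default
  inspect dns preset_dns_map
  inspect ftp
  inspect sqlnet
"""
found = inspected_protocols(sample)
print("sqlnet inspection enabled:", "sqlnet" in found)
```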

Tuesday, November 20, 2012

Hadoop HBase localhost considerations

This message is somewhere in your logfile as part of a complete Java error stack. An example of the error stack can be found below; it is in fact only a WARN level message, however it can mess things up quite a bit:

    2012-11-20 09:29:08,473 WARN org.apache.hadoop.hbase.master.AssignmentManager: Failed assignment of -ROOT-,,0.70236052 to localhost,34908,1353400143063, trying to assign elsewhere instead; retry=0
    org.apache.hadoop.hbase.client.RetriesExhaustedException: Failed setting up proxy interface org.apache.hadoop.hbase.ipc.HRegionInterface to localhost/127.0.0.1:34908 after attempts=1
        at org.apache.hadoop.hbase.ipc.HBaseRPC.handleConnectionException(HBaseRPC.java:291)
        at org.apache.hadoop.hbase.ipc.HBaseRPC.waitForProxy(HBaseRPC.java:259)
        at org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation.getHRegionConnection(HConnectionManager.java:1313)
        at org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation.getHRegionConnection(HConnectionManager.java:1269)
        at org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation.getHRegionConnection(HConnectionManager.java:1256)
        at org.apache.hadoop.hbase.master.ServerManager.getServerConnection(ServerManager.java:550)
        at org.apache.hadoop.hbase.master.ServerManager.sendRegionOpen(ServerManager.java:483)
        at org.apache.hadoop.hbase.master.AssignmentManager.assign(AssignmentManager.java:1640)
        at org.apache.hadoop.hbase.master.AssignmentManager.assign(AssignmentManager.java:1363)
        at org.apache.hadoop.hbase.master.AssignmentManager.assign(AssignmentManager.java:1338)
        at org.apache.hadoop.hbase.master.AssignmentManager.assign(AssignmentManager.java:1333)
        at org.apache.hadoop.hbase.master.AssignmentManager.assignRoot(AssignmentManager.java:2212)
        at org.apache.hadoop.hbase.master.HMaster.assignRootAndMeta(HMaster.java:632)
        at org.apache.hadoop.hbase.master.HMaster.finishInitialization(HMaster.java:529)
        at org.apache.hadoop.hbase.master.HMaster.run(HMaster.java:344)
        at org.apache.hadoop.hbase.master.HMasterCommandLine$LocalHMaster.run(HMasterCommandLine.java:220)
        at java.lang.Thread.run(Thread.java:679)
    Caused by: java.net.ConnectException: Connection refused
        at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)
        at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:592)
        at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)
        at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:489)
        at org.apache.hadoop.hbase.ipc.HBaseClient$Connection.setupConnection(HBaseClient.java:416)
        at org.apache.hadoop.hbase.ipc.HBaseClient$Connection.setupIOstreams(HBaseClient.java:462)
        at org.apache.hadoop.hbase.ipc.HBaseClient.getConnection(HBaseClient.java:1150)
        at org.apache.hadoop.hbase.ipc.HBaseClient.call(HBaseClient.java:1000)
        at org.apache.hadoop.hbase.ipc.WritableRpcEngine$Invoker.invoke(WritableRpcEngine.java:150)
        at $Proxy12.getProtocolVersion(Unknown Source)
        at org.apache.hadoop.hbase.ipc.WritableRpcEngine.getProxy(WritableRpcEngine.java:183)
        at org.apache.hadoop.hbase.ipc.HBaseRPC.getProxy(HBaseRPC.java:335)
        at org.apache.hadoop.hbase.ipc.HBaseRPC.getProxy(HBaseRPC.java:312)
        at org.apache.hadoop.hbase.ipc.HBaseRPC.getProxy(HBaseRPC.java:364)
        at org.apache.hadoop.hbase.ipc.HBaseRPC.waitForProxy(HBaseRPC.java:236)
        ... 15 more
    2012-11-20 09:29:08,476 WARN org.apache.hadoop.hbase.master.AssignmentManager: Unable to find a viable location to assign region -ROOT-,,0.70236052

The root cause of this issue resides in your /etc/hosts file. If you check your /etc/hosts file you will find an entry something like the one below (in my case my machine is named APEX1):

    127.0.0.1 localhost
    127.0.1.1 apex1

    # The following lines are desirable for IPv6 capable hosts
    ::1 ip6-localhost ip6-loopback
    fe00::0 ip6-localnet
    ff00::0 ip6-mcastprefix
    ff02::1 ip6-allnodes
    ff02::2 ip6-allrouters

The root cause is that apex1 resolves to 127.0.1.1, which is incorrect as it should resolve to 127.0.0.1 (or an external IP). As my external IP is 192.168.58.10 I created the following /etc/hosts configuration:

    127.0.0.1 localhost
    192.168.58.10 apex1

    # The following lines are desirable for IPv6 capable hosts
    ::1 ip6-localhost ip6-loopback
    fe00::0 ip6-localnet
    ff00::0 ip6-mcastprefix
    ff02::1 ip6-allnodes
    ff02::2 ip6-allrouters

This will ensure that your hostname is resolved correctly on your local machine and that you can start your HBase installation correctly on your development system.
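A quick way to spot this situation is to scan the hosts file for machine names that point at a loopback address. The sketch below works on the file content as text; the `loopback_names` helper is hypothetical, and skipping `localhost` and the `ip6-` names is an assumption about which entries are expected to be loopback.

```python
import ipaddress

def loopback_names(hosts_text, skip=("localhost",)):
    """Return {name: ip} for names, other than the expected ones, on loopback."""
    suspicious = {}
    for line in hosts_text.splitlines():
        line = line.split("#", 1)[0].strip()   # drop comments and blank lines
        if not line:
            continue
        ip, *names = line.split()
        if ipaddress.ip_address(ip).is_loopback:
            for name in names:
                if name not in skip and not name.startswith("ip6-"):
                    suspicious[name] = ip
    return suspicious

sample = """\
127.0.0.1 localhost
127.0.1.1 apex1
# The following lines are desirable for IPv6 capable hosts
::1 ip6-localhost ip6-loopback
"""
print(loopback_names(sample))  # {'apex1': '127.0.1.1'}
```

On a real system you could pass in the content of /etc/hosts read with `open("/etc/hosts").read()`.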

Monday, January 16, 2012

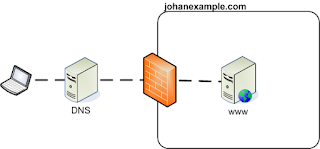

DNS BIND load-balance setup

Now I do think that a lot of people would like to see this page, and for this reason I have built a cluster of webservers, all ready to serve the content of the website www.johanexample.com to the users who want to see it. I have in total 4 webservers up and running; I named them node0, node1, node2 and node3 and they have the IP addresses 192.0.2.20, 192.0.2.21, 192.0.2.22 and 192.0.2.23.

    zone "johanexample.com" {
        type master;
        file "/etc/bind/zones/johanexample.com";
    };

    ; johanexample.com
    $TTL 604800
    @       IN      SOA     ns1.johanexample.com. root.johanexample.com. (
                            2012011501 ; Serial
                            604800     ; Refresh
                            86400      ; Retry
                            2419200    ; Expire
                            604800 )   ; Negative Cache TTL
    ;
    @       IN      NS      ns1
            IN      MX      10 mail
            IN      A       192.168.1.109
    ns1     IN      A       192.168.1.109
    mail    IN      A       192.0.2.128
    www     IN      A       192.0.2.20
            IN      A       192.0.2.21
            IN      A       192.0.2.22
            IN      A       192.0.2.23
    node0   IN      A       192.0.2.20
    node1   IN      A       192.0.2.21
    node2   IN      A       192.0.2.22
    node3   IN      A       192.0.2.23

    www     IN      A       192.0.2.20
            IN      A       192.0.2.21
            IN      A       192.0.2.22
            IN      A       192.0.2.23

I have also created records in the zone file for all the specific nodes so I can access them as node0.johanexample.com, node1.johanexample.com, etc. This is not needed; you can do without, however it can be very handy from time to time. When you play around with your zone files and try some things it is good to know that next to the better known utility named-checkconf you also have a utility to check your zone file: named-checkzone. In this example the way to use it would be:

    root@debian-bind:/# named-checkzone johanexample.com /etc/bind/zones/johanexample.com
    zone johanexample.com/IN: loaded serial 2012011501
    OK
    root@debian-bind:/#

When your zone file is configured correctly and you have configured multiple IPs for one name you should be able to see the load balancing happening when you, for example, run an nslookup command like in the example below:

    root@debian-bind:/# nslookup www.johanexample.com
    Server: 192.168.1.109
    Address: 192.168.1.109#53

    Name: www.johanexample.com
    Address: 192.0.2.21
    Name: www.johanexample.com
    Address: 192.0.2.22
    Name: www.johanexample.com
    Address: 192.0.2.23
    Name: www.johanexample.com
    Address: 192.0.2.20
    root@debian-bind:/#

A second check could be to do multiple ping commands and you will see the target IP change:

    root@debian-bind:/# ping www.johanexample.com
    PING www.johanexample.com (192.0.2.23) 56(84) bytes of data.
    root@debian-bind:/# ping www.johanexample.com
    PING www.johanexample.com (192.0.2.22) 56(84) bytes of data.
    root@debian-bind:/# ping www.johanexample.com
    PING www.johanexample.com (192.0.2.21) 56(84) bytes of data.
    root@debian-bind:/# ping www.johanexample.com
    PING www.johanexample.com (192.0.2.20) 56(84) bytes of data.
    root@debian-bind:/# ping www.johanexample.com
    PING www.johanexample.com (192.0.2.23) 56(84) bytes of data.
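The effect of handing out the same set of addresses in a changing order can be mimicked with a toy model. The sketch below rotates the list by one position per query; this is an assumption made for illustration only, as the order a real BIND server returns depends on its version and configuration, and `cyclic_answers` is a hypothetical helper.

```python
from collections import deque

def cyclic_answers(addresses, queries):
    """Return the answer list for each query, rotating one position per query."""
    ring = deque(addresses)
    answers = []
    for _ in range(queries):
        answers.append(list(ring))
        ring.rotate(-1)   # the first address moves to the back
    return answers

www = ["192.0.2.20", "192.0.2.21", "192.0.2.22", "192.0.2.23"]
for answer in cyclic_answers(www, 5):
    # A client that simply takes the first address ends up on a different node
    print(answer[0])
```

As most clients use the first address they receive, the load spreads over the four nodes without any of them knowing about the others.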

Friday, July 23, 2010

Subversion TCP4 and TCP6

I recently received the request to set up a new source repository for an international team located in India and Europe who will share code during development cycles. The main development will be done on Oracle PL/SQL code, and because TOAD has support for connecting to Subversion and Subversion is known to most developers, the decision was made to set up a Subversion machine. Even though I had installed and used Subversion systems before, I now encountered something new. After installing Subversion and starting svnserve I was unable to connect. Checking whether the daemon was running and checking the config showed that everything was running correctly. Finally this led us to the networking part of the server. And even then it took me a second look before spotting the issue.

Running netstat -l | grep svn gave me the following line:

tcp6 0 0 [::]:svn [::]:* LISTEN

The second time I looked at the result it turned out that the Subversion server was listening on IPv6 instead of the expected IPv4.

Normally you start svnserve with svnserve -d to get it running in daemon mode. It turns out that, at least under Debian, it will pick IPv6 instead of IPv4. You can solve this by starting the daemon with the following command instead:

svnserve -d --listen-host=0.0.0.0

If you check your netstat info after that you will see the following:

tcp 0 0 *:svn *:* LISTEN

Now it was running on IPv4 and all my network routing was working and I was not blocked somewhere along the route.
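The check I did by eye on the netstat output can also be scripted. The sketch below classifies a netstat line by its protocol column and adds a small probe that tries to connect over IPv4 only. Both helper names are hypothetical; the probe assumes the default svnserve port 3690 when you use it against a real server.

```python
import socket

def listen_family(netstat_line):
    """Classify a 'netstat -l' line as IPv4 or IPv6 by its protocol column."""
    proto = netstat_line.split()[0]
    return "IPv6" if proto.endswith("6") else "IPv4"

def reachable_over_ipv4(host, port, timeout=3.0):
    """Return True if a TCP connection over IPv4 to host:port succeeds."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        return sock.connect_ex((host, port)) == 0

print(listen_family("tcp6 0 0 [::]:svn [::]:* LISTEN"))  # IPv6
print(listen_family("tcp 0 0 *:svn *:* LISTEN"))         # IPv4
```

From a client machine, something like `reachable_over_ipv4("svn.example.org", 3690)` (with your own hostname) tells you whether the daemon can be reached over IPv4 at all.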

Friday, August 21, 2009

Basic network security for Oracle Developers

I just recently came back from a 4 day hackers convention in the Netherlands, HAR2009, and have been talking to a lot of people. One of the things that came up during some of the discussions was security and the time a vendor needs to patch things. In most cases an exploit is found and used in the field before the vendor (for example Oracle or a firewall vendor) is aware of it. After the vendor is made aware of the security issue it will take some time to fix it and make a patch available. After the patch is available administrators still need to apply it.

So even though vendors are working on making their systems as secure as possible, and developers who are developing on those platforms are trying to write code that is as secure as possible, you will still see that security breaches happen. You simply cannot find all the errors in the code; even if you test, test, retest and retest you will still have bugs in your code and ways the code can be used that you never thought about.

So simply said, it is almost impossible to create a system that is a hundred percent secure. So if you develop a system as an Oracle developer, think about it as an architect or are responsible for it as a project manager, you will have to know at least some of the basics of security. I will not go into all kinds of coding examples to make your code as secure as possible; I will however provide some best practices, mainly on network security and architecture.

As an example I will use the below image I found in a blogpost by Steven Chan on “Loopbacks, Virtual IPs and the E-Business Suite”. For this document we are not going into the fact that this is an Oracle E-Business Suite setup; we just take it for granted that the web nodes used are simple web nodes and that we are unsure of what they do. It is not really important for the examples, as you should harden and secure every system as much as possible regardless of what the system is doing.

This statement can already raise some questions: why should I harden a system which is not holding any mission critical information, is “normal” security not enough? Simply put, no. Why not? If you “lower” security on non mission critical systems you have a weak link in your security. An attacker can possibly compromise this system and use it as a foothold and starting point for future attacks. You should protect every system as if it were the most important system in your entire company. Never compromise on security for whatever reason. Budget, time, deadlines, policies not in place,… none of this can ever be an excuse to “lower” security on a system.

In the above picture we see the network as shown in the blogpost of Steven Chan. Basically nothing is wrong with this approach; the network diagram is not intended to display security, it is used to explain another issue. We will however use it as the basis for explaining some things. When reading the rest of this article the question might arise whether you as a developer have the “power” to request all the things I will state. However, in my opinion you should mention them and ask about them when developing a system, simply because you would like to have the most secure and stable system possible.

External firewalls:

With external firewalls I mean non-local firewalls, so all firewalls which are in place somewhere in the network. I will make a point on internal firewalls later in this article, where I will be talking about firewalls on your Oracle server. As we can see in the network drawing we have 3 firewalls: a firewall on the outside of your network, a firewall between DMZ0 and DMZ1 and one between DMZ1 and DMZ2. DMZ0 holds the reverse proxy, DMZ1 holds a load balancer and 2 web nodes. DMZ2 is not really a demilitarized zone because it also holds clients in it, the internal users. Possibly the network drawing is showing another firewall which protects your database server.

Although the setup looks valid and protects you with a layer of firewalls from the external network (the internet in this case), it is not complete. You should have another firewall in place to make this more secure. You should place a firewall between the internal users and the rest of DMZ2, which contains web nodes and a load balancer.

The reasons for this: (A) even though most companies try to trust their users you can never be sure. A large portion of hacking attempts is even done from within the company, for example by disgruntled employees. (B) The users will most likely have access to the internet and so can be compromised by malware and rootkits, which could potentially become a gateway into your company. So you cannot trust your users (or the workstations they are using) and you should at least have a firewall between your servers and your clients. It is even advisable to secure your servers from your internal users in the same way as you would protect them from the internet.

Internal firewalls:

So as we stated in the firewall section it is great to have firewalls in place to make sure intruders cannot enter your DMZ without any hurdle. However, when thinking about security and your DMZ you have to keep one thing in mind. What happens if an intruder gets access to your DMZ, what happens when an intruder has compromised a server in your DMZ without you knowing? With the setup as it stands now all other servers are wide open.

To prevent this it is advisable to have internal firewalls on your servers to harden them against attacks from within the DMZ. So you will have secure islands within the secure DMZ sector. This will make it harder for an attacker to take over multiple servers before being spotted. You can use for example iptables to harden your servers with internal firewalls. The setup of iptables rules can be a little hard if you do this for the first time, and even if you have a long history of working with iptables it can be hard to maintain all local iptables settings when you have a large number of servers. For this it can be handy to use tools like fwbuilder or kmyfirewall.

When setting up an internal firewall you can make sure that per interface only those ports are open that are needed for a minimum service on this network segment. I will explain more about network segmentation in combination with internal firewalls in the next topic.

Network Segmentation:

As stated in the previous topic of internal firewalls one has to minimize the open ports and services per network segment so only the ports are open that are needed for this segment. With network segmentation you will make use of all network interfaces in your server to create multiple networks. For example you will have a customer network, a data network and a maintenance network.

Users for example will only use the customer network as we know the servers are webnodes so we will only have to use TCP port 443 for HTTPS traffic over TLS/SSL. Users will most likely have no need to access anything else so no need to provide them the option to connect to those ports.

The data network can be a separate network running over a different NIC which can be used to connect to the database from a web node or to connect storage appliances. This will most likely only be used for server to server connections, so most likely no real-life users have a need to access it. So people who are working on the customer network will not be able to connect to ports on the data network. First because they are not on the same physical network, and even if you have some VLAN configuration mistake or something like that you will have your iptables firewall which will restrict them based upon the source IP address. On this network you do NOT have to open for example port 443 or a port for, for example, SSH or telnet.

The maintenance network will be used by administrators, and on this network you can close for example direct access to network storage appliances, web applications and such. You have to open ports like SSH on this network so administrators can access the servers by secure shell and do their work. This network will be the most wanted by attackers because it will have ports open that can be used and exploited to gain access to the console of the servers.

So by physically having some network segmentation you can separate services and groups of users based upon their role. Are they users or are they administrators of the systems? Based upon this you can provide them access to a certain network segment with its own routers, switches and entire network topology.
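To keep the per-interface rules maintainable you could generate them from a small description of the segments. The sketch below prints iptables commands for a default-deny INPUT policy with one open port per segment. The interface names (eth0, eth1, eth2) and the port per segment are assumptions for illustration; 1521 on the data network would fit a database server, and your own servers will need a different list.

```python
# Hypothetical mapping of network interface to segment role and open TCP ports
SEGMENTS = {
    "eth0": {"role": "customer", "tcp_ports": [443]},
    "eth1": {"role": "data", "tcp_ports": [1521]},
    "eth2": {"role": "maintenance", "tcp_ports": [22]},
}

def iptables_rules(segments):
    """Build iptables commands that open only the listed ports per interface."""
    rules = [
        "iptables -P INPUT DROP",                 # drop everything by default
        "iptables -A INPUT -i lo -j ACCEPT",      # keep local traffic working
        "iptables -A INPUT -m state --state ESTABLISHED,RELATED -j ACCEPT",
    ]
    for iface, segment in sorted(segments.items()):
        for port in segment["tcp_ports"]:
            rules.append(
                f"iptables -A INPUT -i {iface} -p tcp --dport {port} -j ACCEPT"
            )
    return rules

for rule in iptables_rules(SEGMENTS):
    print(rule)
```

The script only prints the commands, so you can review them before running anything on a server.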

Encryption:

We have talked about firewalls on the outside and in the inside, we have discussed separating networks. However, even if you have done all this you cannot feel secure. When thinking about security you always have to consider the network compromised. So if you consider the network compromised someone can sniff all the network traffic by using a network sniffer. So you should state, very strongly, that you can only use encrypted network connections.

This means: never use telnet and only use SSH. Never use FTP, use SFTP or SCP. Never use HTTP, use HTTPS instead. Use encrypted SMTP when you are sending out mails. So you can make your network even more secure by only making use of encrypted connections. By using only encrypted connections a possible attacker who is using a packet analyzer on your network traffic cannot sniff cleartext passwords.

You should restrict users from using open services like telnet, ftp and such by simply closing the ports with iptables.

Coding a custom application can be a little harder when using a secure and encrypted connection, however it will not be that much more work and the benefits are huge. So huge that if you implement it correctly a possible attacker will only be able to see scrambled data and not a single useful packet of information. As this post is intended for Oracle developers it might be good to have a look at the Oracle Application Server Administrator’s Guide, Overview of Secure Socket Layer SSL In Oracle Application Server.

Patching policy:

So we have set up external and internal firewalls, encrypted the network traffic and separated the network into parts. Can we feel safe? No, not really. Even though we have taken all those steps we have to take into consideration that we still have to open some ports, and every open port is a possible point of entry for an attacker. If you are for example running a web node you will have to run a webserver, and as this post is intended for Oracle developers you will most likely be running an Oracle Application Server, which uses Apache. Even though Apache is a really good and secure webserver it still has its security issues. So if the vendor, Oracle, releases a patch you need to give this a very good look, and I advise you to apply it after testing it and reading the specs a couple of times.

A patch is released for a reason, and this reason is solving bugs. Not always security related bugs, however they solve some issues. As you will see, most exploits target old versions, and systems which are not being patched get more and more vulnerable to an attack with every patch they miss. It can be that your server is compromised or that services are disturbed; in all cases it is not desirable. So again, my advice: whenever a patch is released, give it a good look, and if it is not causing a major problem apply it as soon as possible.

If a patch is giving a real problem with your custom code for example you should not simply decide to not apply the patch. You should solve the problem which is holding you back from applying the patch and after that apply the new code and the patch on the systems. If you decide to not apply a patch you can have the situation that some time later a critical patch is released and you still have to apply the first patch as it is a prerequisite for the second patch. Then you still have to do the code fixing. Or you can decide also not to apply the second patch and…… you are simply making your system more and more insecure by every patch you miss.

Securing code:

This post is about network security and not so much about code security. However, while your vendor (Oracle) will provide you with security patches, for your own code you (and your team) are the only ones who can release security patches, so it is important not to test only the standard things in your code. Also have a look at for example SQL injection, buffer overflows, incorrect error handling, etc.
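To make the SQL injection point concrete, the sketch below shows the same lookup written once by pasting user input into the SQL text and once with a bind variable. It uses Python's built-in sqlite3 module with an in-memory database purely so the example runs anywhere; this is a stand-in, not Oracle, and the table and function names are made up. The principle of using bind variables is the same in Oracle code.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "a-secret"), ("bob", "b-secret")],
)

def lookup_unsafe(name):
    # Vulnerable: the input becomes part of the SQL statement itself.
    query = "SELECT secret FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()

def lookup_safe(name):
    # Bind variable: the input is only ever treated as a value.
    return conn.execute(
        "SELECT secret FROM users WHERE name = ?", (name,)
    ).fetchall()

attack = "x' OR '1'='1"
print(len(lookup_unsafe(attack)))  # 2, every row leaks
print(len(lookup_safe(attack)))    # 0, no user has that name
```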

Password policies:

Not really a topic on network security, however something to mention: passwords. It goes without saying that your administrators will have to have some policy in place on passwords: how long a password must be, what the strength should be, etc. However, a topic I would like to mention is Public Key Authentication. In this case you “do not need” a password to log in over SSH. You use a key pair to authenticate yourself to the server. The good thing about this is, for example, that you can allow users to log in as root without them knowing the password. You add a user's public key to the root account and based upon this key the user can log in. So if you want to revoke the rights, you do not have to change the password and inform everyone who still has access to this account. You can simply remove the key for this person, and for the rest of the users nothing will change.

Public Key Authentication is considered much stronger than password authentication, so it is advisable to use only key authentication. It can also be used for, for example, SCP: when you (automatically) move files between servers you can make use of key authentication as well. So give this a good look.

Monitoring and sniffing:

Now we are getting a safe network. However, if we want to be sure that nothing “funny” is happening, we want to monitor the network with an intrusion detection system. For example Snort: Snort is an open source IDS which can monitor and detect strange behavior. This way you can monitor anomalies and possible attacks on your network.

It can also be good to have some log analyzers ready. All your systems will provide log files. Most administrators only look into the log files (and the mails to root) when a problem is found. However, it can be very beneficial to write your own custom log analyzer and create a portal-like environment where you consolidate the log files and analyze them. You can scan for specific error messages and, for example, failed login attempts. Use your creativity.
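As a starting point for such a custom log analyzer, here is a minimal Python sketch that counts failed login attempts per source address. It assumes the log lines look like the usual OpenSSH "Failed password for ... from ... port ..." messages; the function names and the threshold are made up for this example, so adjust the pattern to whatever your own systems actually write.

```python
import re
from collections import Counter

# Assumed message shape (OpenSSH style):
#   "Failed password for [invalid user] NAME from ADDRESS port N ssh2"
FAILED_LOGIN = re.compile(
    r"Failed password for (?:invalid user )?(\S+) from (\S+) port \d+"
)


def count_failed_logins(lines):
    """Return a Counter mapping source address -> number of failed logins."""
    counts = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(2)] += 1
    return counts


def suspicious_sources(lines, threshold=3):
    """Return the sorted addresses with at least `threshold` failed logins."""
    counts = count_failed_logins(lines)
    return sorted(addr for addr, seen in counts.items() if seen >= threshold)
```

You could feed it the lines of a consolidated log file and show the result on your portal, or mail it to the administrators once a day.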

So with the combination of Snort and log analyzers you can keep a close watch on whether someone is still trying to do damage in your network.

Thursday, July 30, 2009

Crash a BIND9 server

ISC is reporting a security flaw in its BIND 9 distribution. It turns out that you can cause a BIND server to crash remotely. As BIND is one of the most used DNS servers around the world, and DNS is the glue which makes the internet stick together, this can be considered quite a high risk. You can crash a server by sending it a dynamic update for a zone for which the server is the DNS master. Or as it is stated on isc.org:

Receipt of a specially-crafted dynamic update message to a zone for which the server is the master may cause BIND 9 servers to exit. Testing indicates that the attack packet has to be formulated against a zone for which that machine is a master. Launching the attack against slave zones does not trigger the assert.

This vulnerability affects all servers that are masters for one or more zones – it is not limited to those that are configured to allow dynamic updates. Access controls will not provide an effective workaround.

dns_db_findrdataset() fails when the prerequisite section of the dynamic update message contains a record of type “ANY” and where at least one RRset for this FQDN exists on the server.

db.c:659: REQUIRE(type != ((dns_rdatatype_t)dns_rdatatype_any)) failed

exiting (due to assertion failure).

Now if we take a look at the dynamic update part:

Dynamic Update is a method for adding, replacing or deleting records in a master server by sending it a special form of DNS messages. The format and meaning of these messages is specified in RFC 2136.

Dynamic update is enabled on a zone-by-zone basis, by including an allow-update or update-policy clause in the zone statement.

Updating of secure zones (zones using DNSSEC) follows RFC 3007: RRSIG and NSEC records affected by updates are automatically regenerated by the server using an online zone key. Update authorization is based on transaction signatures and an explicit server policy.

The dynamic update can be done via the nsupdate command. I will not explain on this weblog how you can crash a BIND9 machine, however I can confirm the threat is real, and with a little understanding of BIND and some research it is possible. I have been using a test environment to check it, and if you are running a BIND9 server you might want to dive a little deeper into this.

Saturday, February 28, 2009

DNSsec for .gov

It is already some time ago that Dan Kaminsky published his exploit on DNS; this was by far one of the most shocking events for DNS admins in a long time. For those who like to understand the exploit found by Dan Kaminsky, there is an excellent guide on unixwiz.net which is a very good introduction to the DNS exploit.

To tighten security, the administrators of .gov domains are now told to implement DNSsec to make sure the .gov domain servers will be more secure. Besides the fact that tightening your security is always a good plan, this will also be a boost for the DNSsec project. To get a good view on DNSsec you can review the video below, which is a very fast introduction to DNSsec.

In very short form, the steps to create keys for DNSsec on a Linux BIND server are noted below. However, this is a very short guide and it leaves a lot of open spots. You might want to look around to find more details. I might even write a more detailed guide on this, however, for now you will have to make do with this.

- Make sure you are running at least BIND 9.3, version 9.3 is the first version where you can sign your zones.

- Review your named.conf file and make sure that dnssec-enable is set to yes.

- Next, create a key for your zone, a ZSK (Zone Signing Key): dnssec-keygen -a RSASHA1 -b 1024 -n ZONE somedomain.com

- Create a KSK (Key Signing Key) to sign your ZSK: dnssec-keygen -a RSASHA1 -b 2048 -n ZONE -f KSK somedomain.com
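If you have to create keys for more than one zone, the two dnssec-keygen calls above can be wrapped in a small Python helper. This is only a sketch: the function names are made up for this example, it uses exactly the flags shown in the steps above, and it assumes dnssec-keygen is on the PATH of the user running it.

```python
import subprocess


def keygen_commands(zone):
    """Build the two dnssec-keygen command lines from the steps above."""
    zsk = ["dnssec-keygen", "-a", "RSASHA1", "-b", "1024", "-n", "ZONE", zone]
    ksk = ["dnssec-keygen", "-a", "RSASHA1", "-b", "2048", "-n", "ZONE",
           "-f", "KSK", zone]
    return [zsk, ksk]


def generate_keys(zone, run=subprocess.run):
    """Run both commands; `run` can be swapped out for a dry run or a test."""
    for command in keygen_commands(zone):
        run(command, check=True)
```

Calling generate_keys("somedomain.com") in the directory where you keep your zone files creates the ZSK first and the KSK second.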

To get all up and running you have to take some more steps, however, in this post I only want to show you how to create your keys needed for DNSsec.

Sunday, February 22, 2009

WiFi bridge, wireless repeaters

Consider the situation that you have a WiFi router and one of your neighbors wants to make use of it, and that his neighbor also wants to make use of your connection. If you do not have any problem with that, it can be quite nice to start a neighborhood network. The problem you might face is that you are not able to cover the entire area with one single WiFi router. In this case you want to extend your WiFi signal in such a way that all the people who want to use it are able to pick up the signal. You could start digging and running cables to places where you can put another WiFi router. You could also consider repeating your signal over several WiFi routers.

In this case you have one (or more) central WiFi routers which are connected to the backbone of your network. From there you send your signal via WiFi to another router, which redistributes the signal. So you will end up with some routers which do not have any cable plugged in. For example, you can use the out of the box functions of a Linksys WAP54G or WRT54G WiFi router to use it as a wireless repeater. Some great guides on how to set up a router in such a way have been published; I would like to point out the one I used, from Nohold.net. You should check out this guide when you want to set up a wide WiFi network simply and quickly.

Saturday, December 13, 2008

Cluster Computing Network Blueprint

Those who have been watching the lecture by Aaron Kimball from Google, "cluster computing and mapreduce lecture 1", might have noticed that a big portion of the first part of the lecture is about networking and why networking is so important to distributed computing.

"Designing real distributed systems requires consideration of networking topology."

Let's say you are designing a Hadoop cluster for distributed computing for a company which will be processing lots and lots of information during the night, to be able to use this information the next morning for daily business. The last thing you want is that the work is not completed during the night due to a networking problem. A failing switch can, in certain network setups, make you lose a large portion of your computing power. Thinking about the network setup and making a good network blueprint for your system is a vital part of creating a successful solution.

We take for example the previously mentioned company. This company is working on chip research, and during the day people are making new designs and algorithms which need to be tested in your Hadoop cluster during the night. The next morning when people come in they expect to have the results of the jobs they placed in the Hadoop queue the previous day. The number of engineers in this company is enormous, and the cluster has a utilization of around 98% during 'non working hours' and 80% during working hours. As you can see, a failure of the entire system or a reduction of the computing power will have an enormous impact on the daily work of all the engineers.

A closer look at this theoretical cluster.

- The cluster consists of 960 computing nodes.

- A node is a 1U server with 2 quad-core processors and 6 gigabytes of RAM.

- The nodes are placed in 19-inch racks; every rack houses 24 computing nodes.

- There are 40 racks with computing nodes.

As you can see, if we would lose an entire rack due to a network failure we would lose 24 of the 960 nodes, which is 2.5% of the computing power. As the cluster is used for 98% during the night and only 97.5% would remain, we would not have enough computing power to do all the work during the night. Losing a single node will not be a problem, however losing a stack of nodes will result in a major problem during the next day. For this we will have to create a network blueprint in which we can ensure that we will not lose an entire computing stack. If we talk about a computing stack, we talk about a rack of 24 servers in this example.
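The numbers above are easy to check with a few lines of Python. This is just the arithmetic of this theoretical cluster; the function names are made up for the example.

```python
def rack_count(nodes, nodes_per_rack):
    """Number of full racks needed for the cluster."""
    return nodes // nodes_per_rack


def capacity_after_rack_loss(nodes, nodes_per_rack, racks_lost=1):
    """Fraction of the computing power that is left after losing racks."""
    return (nodes - racks_lost * nodes_per_rack) / nodes


def can_finish(utilisation, capacity):
    """True when the remaining capacity still covers the nightly load."""
    return utilisation <= capacity


remaining = capacity_after_rack_loss(960, 24)
print(rack_count(960, 24))          # number of racks
print(round(remaining * 100, 1))    # percentage of capacity left
print(can_finish(0.98, remaining))  # can the nightly 98% load still be done?
```

With the 80% load of working hours the same rack failure would not be a problem, which is why the night is the critical period for this cluster.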

First we have to take a look at how we will connect the racks. On the Cisco website you can find the Cisco Catalyst 3560 product page. The Cisco Catalyst 3560 has 4 fiber ports and we will be using those switches to connect the computing stacks. However, to ensure network redundancy we will use 2 switches for every stack instead of one. As you can see in the diagram below, we crosslink the switches. SW-B0 and SW-B1 will both be handling computing stack B, switches SW-C0 and SW-C1 will be handling the network load for computing stack C, and so on. We will be connecting SW-B0 with fiber to SW-C0 and SW-C1. We will also connect SW-B1 with fiber to SW-C0 and SW-C1. In case SW-B0 or SW-B1 fails, the network can still route traffic to the switches in the B computing stack and also to the A computing stack. By creating the network in this way the network will not fail to route traffic to the other stacks; the only thing that will happen is that the surviving switch will have to handle more load.

This setup will, however, not solve the problem that the nodes connected to the failing switch will lose their network connection. To resolve this we will attach every node to two switches. Every computing stack has 24 computing nodes, each switch has 48 ports and we have 2 switches per stack. We place 2 network interfaces in every node: one will be active and one will be in standby mode. To spread the load, at all even numbered nodes the active NIC will be connected to switch 0, and at all odd numbered nodes the active NIC will be connected to switch 1. For the inactive (standby) NIC, all the even numbered nodes will be connected to switch 1 and all odd numbered nodes will be connected to switch 0. In a normal situation the load will be balanced between the two switches; in case one of the two switches fails, the standby NICs will have to become active and all the network traffic to the nodes in the computing stack will be handled by the surviving switch.
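The even/odd cabling rule can be written down as a small Python sketch. It only models which switch carries the traffic of each node; the function names and the node numbering from 0 to 23 are assumptions for this example.

```python
def nic_plan(node_number):
    """Return the switch (0 or 1) used by the active and the standby NIC."""
    active = node_number % 2  # even nodes -> switch 0, odd nodes -> switch 1
    return {"active": active, "standby": 1 - active}


def switch_load(node_numbers, failed_switch=None):
    """Count how many nodes send their traffic through each switch."""
    load = {0: 0, 1: 0}
    for node in node_numbers:
        plan = nic_plan(node)
        target = plan["active"]
        if target == failed_switch:
            # The standby NIC takes over when the active switch is down.
            target = plan["standby"]
        load[target] += 1
    return load


print(switch_load(range(24)))                   # normal situation
print(switch_load(range(24), failed_switch=0))  # switch 0 has failed
```

In the normal situation both switches carry 12 nodes each; after a failure the surviving switch carries all 24, which still fits within its 48 ports.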

To have the NICs switch over to the surviving network switch, and to make sure that operations continue as normal, you will have to make sure that the network keeps seeing the servers in the same way as before the moment one of the switches failed. To do this you will have to make sure that the new NIC has the same IP address and MAC address. To do so you can make use of IPAT, IP Address Takeover.

"IP address takeover feature is available on many commercial clusters. This feature protects an installation against failures of the Network Interface Cards (NICs). In order to make this mechanism work, installations must have two NICs for each IP address assigned to a server. Both the NICs must be connected to the same physical network. One NIC is always active while the other is in a standby mode. The moment the system detects a problem with the main adapter, it immediately fails over to the standby NIC. Ongoing TCP/IP connections are not disturbed and as a result clients do not notice any downtime on the server. "

Now we have tackled almost every possible breakdown. However, it can happen that not one switch but both switches in a stack break. If we look at the examples above, this would mean that the remaining stacks will be separated by the broken stack. To prevent this you will have to make a connection between the first and the last stack, just as you make between all the other stacks. By doing so you make a 'ring' of your network. With a correct setup of all your switches and good routing and failover routing, your network can also handle the malfunction of a complete stack, including both switches in the stack.

Even though this is a theoretical blueprint, developing your network in such a way, in combination with writing your own code to control network flows, scripts to control the IPAT and the switching back of IPAT, and thinking about reporting and alerting mechanisms, will make a very solid network. If there are any questions about this networking blueprint for cluster computing please do send me an e-mail or post a comment. I will reply with an answer (good or bad) or explain things in more detail in a new post.
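To see why the ring helps, here is a minimal Python sketch that treats every computing stack as one point in a chain and checks whether the surviving stacks can still reach each other after one complete stack has failed. It is a simplification of the blueprint (the two switches per stack are collapsed into one point) and the function names are made up for this example.

```python
from collections import deque


def stack_links(count, ring):
    """Links between neighbouring stacks, optionally closed into a ring."""
    links = {(i, i + 1) for i in range(count - 1)}
    if ring and count > 2:
        links.add((count - 1, 0))
    return links


def survivors_connected(count, ring, failed):
    """True when all stacks except `failed` can still reach each other."""
    alive = [stack for stack in range(count) if stack != failed]
    neighbours = {stack: set() for stack in alive}
    for a, b in stack_links(count, ring):
        if a != failed and b != failed:
            neighbours[a].add(b)
            neighbours[b].add(a)
    # Breadth-first search starting from the first surviving stack.
    seen = {alive[0]}
    queue = deque([alive[0]])
    while queue:
        current = queue.popleft()
        for nxt in neighbours[current]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return len(seen) == len(alive)


print(survivors_connected(40, ring=False, failed=20))  # chain, middle stack down
print(survivors_connected(40, ring=True, failed=20))   # ring, middle stack down
```

Without the extra link a failed stack in the middle splits the chain in two; with the ring there is always a second path around the broken stack.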

Sunday, December 07, 2008

Macbook and wifi

I recently purchased a new MacBook Pro, which resulted in an 'old' MacBook hanging around my desk without being used for some time. As my girlfriend is still working on an old laptop I purchased a couple of years back, she was interested in using my old MacBook. One of the things she did not like, however, was the default operating system on my MacBook. She wanted to run Linux on it.

Installation of Ubuntu on a MacBook is very simple, just take the normal steps of installing Ubuntu. However, the WiFi drivers are a story of their own. If you are installing Ubuntu on a MacBook, don't do what I did: just follow the guide for installing the ath9k driver and you will be ready to go. It is part of an Ubuntu howto on installing on a MacBook.

Besides installing the ath9k drivers, you can also get WiFi working on a MacBook with Ubuntu by using madwifi or ndiswrapper. It is all in the guide, so just follow the guide and you will be able to run Ubuntu on a MacBook.

Saturday, November 29, 2008

find subdomains of a domain

You might find yourself in a situation where you would like to know all the listed sub-domains in a domain. If you are the administrator of the DNS server you will not have much trouble finding the information; if you are not the administrator of the server you can have a hard time finding out the sub-domains for a domain.

There is a 'trick' you can use to make the domain server tell you what the sub-domains for a domain are. This 'trick' does not always work, however. If the domain server you are talking to allows DNS zone transfers, and it allows this information to be sent to any IP address that requests it, you are in luck. A DNS zone transfer is used to update slave DNS servers from the master DNS server.

When you operate several DNS servers you do not want to update them all when you are making a change. In an ideal situation you update the master server and the slave servers send a request to the master server at a fixed interval to pick up the changes. In some cases the administrator of the DNS servers has not set a limitation on who can request those updates. This means that you can request an update as well.

A quick example: we take the domain knmi.nl, which is the Dutch meteorological institute. First we would like to know the authoritative nameserver of the domain, so we do a 'dig knmi.nl' at a Linux shell:

jlouwers$ dig knmi.nl

; <<>> DiG 9.4.2-P2 <<>> knmi.nl

;; global options: printcmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 24089

;; flags: qr rd ra; QUERY: 1, ANSWER: 0, AUTHORITY: 1, ADDITIONAL: 0

;; QUESTION SECTION:

;knmi.nl. IN A

;; AUTHORITY SECTION:

knmi.nl. 4521 IN SOA styx3.knmi.nl. postmaster.styx3.knmi.nl. 2008112601 14400 1800 3600000 86400

;; Query time: 18 msec

;; SERVER: 212.54.40.25#53(212.54.40.25)

;; WHEN: Sat Nov 29 12:32:55 2008

;; MSG SIZE rcvd: 78

jlouwers$

"dig (domain information groper) is a flexible tool for interrogating DNS name servers. It performs DNS lookups and displays the answers that are returned from the name server(s) that were queried. Most DNS administrators use dig to troubleshoot DNS problems because of its flexibility, ease of use and clarity of output. Other lookup tools tend to have less functionality than dig."

So now we know the authoritative nameserver of the domain knmi.nl; you can find it under ";; AUTHORITY SECTION:". We will be asking styx3.knmi.nl for all the subdomains listed for this domain. This can be done with a command like 'dig @styx3.knmi.nl knmi.nl axfr'. With this command we ask styx3.knmi.nl for all records for knmi.nl with an axfr query; axfr is the zone transfer request.

jlouwers$

jlouwers$

jlouwers$ dig @styx3.knmi.nl knmi.nl axfr

; <<>> DiG 9.4.2-P2 <<>> @styx3.knmi.nl knmi.nl axfr

; (1 server found)

;; global options: printcmd

knmi.nl. 86400 IN SOA styx3.knmi.nl. postmaster.styx3.knmi.nl. 2008112601 14400 1800 3600000 86400

knmi.nl. 86400 IN MX 5 birma1.knmi.nl.

knmi.nl. 86400 IN MX 10 birma2.knmi.nl.

knmi.nl. 86400 IN NS ns1.surfnet.nl.

knmi.nl. 86400 IN NS ns3.surfnet.nl.

knmi.nl. 86400 IN NS styx3.knmi.nl.

*.knmi.nl. 86400 IN MX 5 birma1.knmi.nl.

*.knmi.nl. 86400 IN MX 10 birma2.knmi.nl.

adaguc.knmi.nl. 86400 IN CNAME www.knmi.nl.

alvtest.knmi.nl. 86400 IN A 145.23.254.224

bbc.knmi.nl. 86400 IN CNAME bgwcab.knmi.nl.

bcrpwt.knmi.nl. 86400 IN A 145.23.254.127

bdyis1.knmi.nl. 86400 IN CNAME www3.knmi.nl.

bdypwt.knmi.nl. 86400 IN A 145.23.254.159

bemc.knmi.nl. 86400 IN CNAME styx3.knmi.nl.

besarv.knmi.nl. 86400 IN A 145.23.254.219

bgoftp.knmi.nl. 300 IN A 145.23.254.254

bgwcab.knmi.nl. 86400 IN A 145.23.254.228

bhlbpaaa01.knmi.nl. 300 IN A 145.23.253.111

bhlbpaaa02.knmi.nl. 300 IN A 145.23.253.112

birma1.knmi.nl. 86400 IN A 145.23.254.201

birma2.knmi.nl. 86400 IN A 145.23.254.202

bswor1.knmi.nl. 86400 IN A 145.23.4.1

bswor3.knmi.nl. 86400 IN A 145.23.254.243

bswor4.knmi.nl. 86400 IN A 145.23.254.244

bswso1.knmi.nl. 86400 IN A 145.23.16.25

cesar-database.knmi.nl. 86400 IN A 145.23.253.206

charon1.knmi.nl. 86400 IN A 145.23.254.151

charon2.knmi.nl. 86400 IN A 145.23.254.152

charon3.knmi.nl. 86400 IN A 145.23.254.153

climexp.knmi.nl. 300 IN A 145.23.253.225

cloudnet.knmi.nl. 86400 IN CNAME bgwcab.knmi.nl.

codex.knmi.nl. 86400 IN CNAME hades.knmi.nl.

eca.knmi.nl. 300 IN A 145.23.253.211

ecaccess.knmi.nl. 86400 IN CNAME styx1.knmi.nl.

ecad.knmi.nl. 86400 IN CNAME eca.knmi.nl.

ecadev.knmi.nl. 86400 IN A 145.23.254.218

ecearth.knmi.nl. 86400 IN CNAME climexp.knmi.nl.

esr.knmi.nl. 86400 IN A 145.23.254.216

ftp.knmi.nl. 86400 IN CNAME ftpgig.knmi.nl.

ftp2.knmi.nl. 86400 IN A 145.23.253.247

ftpgig.knmi.nl. 300 IN A 145.23.253.248

ftpmeteo.knmi.nl. 86400 IN A 145.23.254.54

ftppro.knmi.nl. 300 IN A 145.23.253.245

gate.knmi.nl. 86400 IN CNAME hades.knmi.nl.

gate1.knmi.nl. 86400 IN CNAME hades.knmi.nl.

gate2.knmi.nl. 86400 IN A 145.23.254.252

gate2p1.knmi.nl. 86400 IN CNAME charon1.knmi.nl.

gate2p2.knmi.nl. 86400 IN CNAME charon2.knmi.nl.

gate2p4.knmi.nl. 86400 IN CNAME webmail.knmi.nl.

gate3.knmi.nl. 86400 IN A 145.23.254.253

gate6.knmi.nl. 86400 IN A 145.23.254.236

geoservices.knmi.nl. 86400 IN A 145.23.253.210

hades.knmi.nl. 86400 IN A 145.23.254.158

hades1.knmi.nl. 86400 IN A 145.23.254.213

hexnet.knmi.nl. 86400 IN A 145.23.254.221

hl.knmi.nl. 86400 IN CNAME hexnet.knmi.nl.

hug.knmi.nl. 86400 IN CNAME hexnet.knmi.nl.

kd.knmi.nl. 300 IN A 145.23.253.193

kodac.knmi.nl. 300 IN A 145.23.253.131

localhost.knmi.nl. 86400 IN A 127.0.0.1

lvxtest.knmi.nl. 86400 IN A 145.23.254.227

maris.knmi.nl. 300 IN A 145.23.253.132

nadc-virt.knmi.nl. 86400 IN A 145.23.254.48

nadc01.knmi.nl. 86400 IN A 145.23.254.50

nadc02.knmi.nl. 86400 IN A 145.23.254.49

nadcorders.knmi.nl. 300 IN A 145.23.240.221

namis.knmi.nl. 86400 IN A 145.23.253.246

neonet.knmi.nl. 86400 IN A 145.23.254.222

neries.knmi.nl. 86400 IN A 145.23.254.250

newalv.knmi.nl. 86400 IN A 145.23.254.24

newhirlam.knmi.nl. 86400 IN A 145.23.254.106

newlvx.knmi.nl. 86400 IN A 145.23.254.25

newshost.knmi.nl. 86400 IN CNAME gate2.knmi.nl.

ns.knmi.nl. 86400 IN CNAME styx3.knmi.nl.

opmet.knmi.nl. 86400 IN CNAME newalv.knmi.nl.

orfeus.knmi.nl. 86400 IN CNAME neries.knmi.nl.

orfeustest.knmi.nl. 86400 IN CNAME bswor4.knmi.nl.

overheid.knmi.nl. 300 IN A 145.23.253.238

overheid-test.knmi.nl. 86400 IN A 145.23.253.237

portal.knmi.nl. 86400 IN A 145.23.254.191

sorry.knmi.nl. 86400 IN A 145.23.253.252

styx1.knmi.nl. 86400 IN A 145.23.254.238

styx2.knmi.nl. 86400 IN A 145.23.254.159

styx3.knmi.nl. 86400 IN A 145.23.254.155

swe.knmi.nl. 86400 IN CNAME styx3.knmi.nl.

test.knmi.nl. 86400 IN CNAME bcrpwt.knmi.nl.

testaccess.knmi.nl. 86400 IN A 145.23.254.195

trajks.knmi.nl. 86400 IN CNAME climexp.knmi.nl.

wap.knmi.nl. 86400 IN CNAME www.knmi.nl.

webaccess.knmi.nl. 86400 IN A 145.23.254.190

webmail.knmi.nl. 86400 IN A 145.23.254.154

www.knmi.nl. 300 IN A 145.23.253.254

www3.knmi.nl. 86400 IN A 145.23.254.200

knmi.nl. 86400 IN SOA styx3.knmi.nl. postmaster.styx3.knmi.nl. 2008112601 14400 1800 3600000 86400

;; Query time: 19 msec

;; SERVER: 145.23.254.155#53(145.23.254.155)

;; WHEN: Sat Nov 29 12:39:04 2008

;; XFR size: 95 records (messages 1, bytes 2169)

jlouwers$

jlouwers$

jlouwers$

This is how you can get a complete list of all subdomains listed at a domain server. However, this will only work in cases that a domain server is allowing you to request a zone transfer.
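If you want to process such a zone transfer further, the dig output is easy to parse. Below is a minimal Python sketch which turns the output into records and lists the sub-domains. It assumes the five column layout shown above (name, TTL, class, type, data); the function names are made up for this example.

```python
def parse_axfr(output):
    """Turn dig AXFR output into (name, ttl, type, data) tuples."""
    records = []
    for line in output.splitlines():
        line = line.strip()
        # Skip empty lines and dig comment lines, which start with ';'.
        if not line or line.startswith(";"):
            continue
        fields = line.split(None, 4)
        # Skip anything that is not a "name ttl IN type data" record,
        # for example shell prompts that ended up in the captured text.
        if len(fields) < 5 or fields[2] != "IN":
            continue
        name, ttl, _record_class, record_type, data = fields
        records.append((name.rstrip("."), int(ttl), record_type, data))
    return records


def subdomains(records, domain):
    """Return the sorted, unique names below `domain`, without wildcards."""
    names = {
        name for name, _ttl, _type, _data in records
        if name.endswith("." + domain) and not name.startswith("*")
    }
    return sorted(names)
```

You can feed it the text captured from 'dig @styx3.knmi.nl knmi.nl axfr' and call subdomains(records, "knmi.nl") to get the plain list of names.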

Thursday, October 09, 2008

Cisco PCF files

I was recently asked to prep some VPN profile files for a customer, as I have been playing around with Cisco PIX firewalls. Playing with a Cisco PIX firewall is NOT an indication that I know all about it or that I know all about the Cisco pcf file format. However, I found out that a .pcf file is a flat text file you can modify with vi to your liking. A basic file looks like the one below. All you have to know is the meaning of every line and you can create a .pcf file.

Description=some-name

!Host=10.20.30.40

!AuthType=1

!GroupName=

!GroupPwd=

!enc_GroupPwd=

EnableISPConnect=0

ISPConnectType=0

ISPConnect=

ISPCommand=

Username=

SaveUserPassword=0

UserPassword=

enc_UserPassword=

!NTDomain=

!EnableBackup=0

!BackupServer=

!EnableMSLogon=1

!MSLogonType=0

!EnableNat=1

!TunnelingMode=0

!TcpTunnelingPort=10000

CertStore=0

CertName=

CertPath=

CertSubjectName=

CertSerialHash=00000000000000000000000000000000

SendCertChain=0

VerifyCertDN=

DHGroup=2

ForceKeepAlives=1

PeerTimeout=90

!EnableLocalLAN=0

!EnableSplitDNS=1

ISPPhonebook=
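As the file is just a flat list of key=value lines, it can also be read from a script. Below is a minimal Python sketch of such a reader. It treats a leading exclamation mark as the read-only marker (the same "read-only (!) setting" mentioned in the parameter descriptions in this post); the function name and the shape of the returned dictionary are made up for this example.

```python
def parse_pcf(text):
    """Parse .pcf text into {name: {"value": ..., "read_only": ...}}."""
    settings = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        # Skip blank lines and anything that is not a key=value pair.
        if not line or "=" not in line:
            continue
        key, value = line.split("=", 1)
        read_only = key.startswith("!")
        settings[key.lstrip("!")] = {"value": value, "read_only": read_only}
    return settings


profile = parse_pcf("Description=some-name\n!Host=10.20.30.40\nPeerTimeout=90\n")
print(profile["Host"])
```

Writing a profile is the reverse: put the exclamation mark back in front of every read-only key and write one key=value pair per line.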

So a short explanation of the main options you have in a pcf file.

Description

The Description is a string of maximum 246 alphanumeric characters describing the use of the VPN connection

Host

The Host line is used to provide the IP address or the domain name of the VPN server/device. Maximum 255 alphanumeric characters.

AuthType

The AuthType defines the way the user is authenticated against the server/device. 1 = Pre-shared keys (default) 3 = Digital Certificate using an RSA signature 5 = Mutual authentication

GroupName

The name of the IPSec group that contains this user. Used with pre-shared keys. The exact name of the IPSec group configured on the VPN central-site device. Maximum 32 alphanumeric characters. Case-sensitive.

GroupPwd

Group Password. The password for the IPSec group that contains this user. Used with pre-shared keys. The first time the VPN Client reads this password, it replaces it with an encrypted one (enc_GroupPwd). The exact password for the IPSec group configured on the VPN central-site device. Minimum of 4, maximum 32 alphanumeric characters. Case-sensitive clear text.

enc_GroupPwd

The password for the IPSec group that contains the user. Used with pre-shared keys. This is the scrambled version of the GroupPwd. Binary data represented as alphanumeric text.

EnableISPConnect

Connect to the Internet via Dial-Up Networking. Specifies whether the VPN Client automatically connects to an ISP before initiating the IPSec connection; determines whether to use PppType parameter. 0 = Disable (default) 1 = Enable. The choice between ISPConnect and ISPCommand is made with ISPConnectType (0 = ISPConnect (default) 1 = ISPCommand). The VPN Client GUI ignores a read-only setting on this parameter.

ISPConnect

Dial-Up Networking Phonebook Entry (Microsoft). Use this parameter to dial into the Microsoft network; dials the specified dial-up networking phone book entry for the user's connection. Applies only if EnableISPconnect=1 and ISPConnectType=0.

ISPCommand

Dial-Up Networking Phonebook Entry (command). Use this parameter to specify a command to dial the user's ISP dialer. Applies only if EnableISPconnect=1 and ISPConnectType=1. Command string: This variable includes the pathname to the command and the name of the command complete with arguments; for example: "c:\isp\ispdialer.exe dialEngineering" Maximum 512 alphanumeric characters.

Username

User Authentication: Username. The name that authenticates a user as a valid member of the IPSec group specified in GroupName. The exact username. Case-sensitive, clear text, maximum of 32 characters. The VPN Client prompts the user for this value during user authentication.

UserPassword

User Authentication: Password. The password used during extended authentication. The first time the VPN Client reads this password, it saves it in the file as the enc_UserPassword and deletes the clear-text version. If SaveUserPassword is disabled, then the VPN Client deletes the UserPassword and does not create an encrypted version. You should only modify this parameter manually if there is no GUI interface to manage profiles.

enc_UserPassword

Scrambled version of the user's password

SaveUserPassword

Determines whether or not the user password or its encrypted version are valid in the profile. This value is pushed down from the VPN central-site device. 0 = (default) do not allow user to save password information locally. 1 = allow user to save password locally.

NTDomain

User Authentication: Domain. The NT Domain name configured for the user's IPSec group. Applies only to user authentication via a Windows NT Domain server. Maximum 14 alphanumeric characters. Underbars are not allowed.

EnableBackup

Enable backup server(s) specifies whether to use backup servers if the primary server is not available. 0 = Disable (default) 1 = Enable.

BackupServer

(Backup server list). List of hostnames or IP addresses of backup servers. Applies only if EnableBackup=1. Legitimate Internet hostnames, or IP addresses in dotted decimal notation. Separate multiple entries by commas. Maximum of 255 characters in length.

EnableMSLogon

Logon to Microsoft Network. Specifies that users log on to a Microsoft network. Applies only to systems running Windows 9x. 0 = Disable 1 = Enable (default)

MSLogonType

Use default system logon credentials. Prompt for network logon credentials. Specifies whether the Microsoft network accepts the user's Windows username and password for logon, or whether the Microsoft network prompts for a username and password. Applies only if EnableMSLogon=1. 0 = (default) Use default system logon credentials; i.e., use the Windows logon username and password. 1 = Prompt for network logon username and password.

EnableNat

Enable Transparent Tunneling. Allows secure transmission between the VPN Client and a secure gateway through a router serving as a firewall, which may also be performing NAT or PAT. 0 = Disable 1 = Enable (default)

TunnelingMode

Specifies the mode of transparent tunneling, over UDP or over TCP; must match that used by the secure gateway with which you are connecting. 0 = UDP (default) 1 = TCP

TcpTunnelingPort

Specifies the TCP port number, which must match the port number configured on the secure gateway. Port number from 1 through 65535. Default = 10000

EnableLocalLAN

Allow Local LAN Access. Specifies whether to enable access to resources on a local LAN at the Client site while connected through a secure gateway to a VPN device at a central site. 0 = Disable (default) 1 = Enable

PeerTimeout

Peer response time-out. The number of seconds to wait before terminating a connection because the VPN central-site device on the other end of the tunnel is not responding. Number of seconds. Minimum = 30 seconds Maximum = 480 seconds Default = 90 seconds
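When profiles are generated automatically, a quick range check on PeerTimeout avoids handing out a value outside the documented limits. A minimal sketch, assuming the value arrives as a string and that a missing or empty value falls back to the default; the function name `peer_timeout` is hypothetical.

```python
PEER_TIMEOUT_MIN = 30       # seconds
PEER_TIMEOUT_MAX = 480      # seconds
PEER_TIMEOUT_DEFAULT = 90   # seconds


def peer_timeout(raw=None):
    """Return the peer time-out in seconds, checked against the limits above."""
    # Assumption: an absent or empty value means "use the default".
    if raw is None or not raw.strip():
        return PEER_TIMEOUT_DEFAULT
    seconds = int(raw)
    if not PEER_TIMEOUT_MIN <= seconds <= PEER_TIMEOUT_MAX:
        raise ValueError(
            "PeerTimeout must be between %d and %d seconds"
            % (PEER_TIMEOUT_MIN, PEER_TIMEOUT_MAX)
        )
    return seconds


print(peer_timeout("120"))
```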

CertStore

Certificate Store. Identifies the type of store containing the configured certificate. 0 = No certificate (default) 1 = Cisco 2 = Microsoft. The VPN Client GUI ignores a read-only (!) setting on this parameter.

CertName

Certificate Name. Identifies the certificate used to connect to a VPN central-site device. Maximum 129 alphanumeric characters. The VPN Client GUI ignores a read-only setting on this parameter.

CertPath

The complete pathname of the directory containing the certificate file. Maximum 259 alphanumeric characters. The VPN Client GUI ignores a read-only setting on this parameter.

CertSubjectName

The fully qualified distinguished name (DN) of the certificate's owner. If present, the VPN Dialer enters the value for this parameter. Either do not include this parameter or leave it blank. The VPN Client GUI ignores a read-only setting on this parameter.

CertSerialHash

A hash of the certificate's complete contents, which provides a means of validating the authenticity of the certificate. If present, the VPN Dialer enters the value for this parameter. Either do not include this parameter or leave it blank. The VPN Client GUI ignores a read-only setting on this parameter.

SendCertChain

Sends the chain of CA certificates between the root certificate and the identity certificate plus the identity certificate to the peer for validation of the identity certificate. 0 = disable (default) 1 = enable

VerifyCertDN

Prevents a user from connecting to a valid gateway by using a stolen but valid certificate and a hijacked IP address. If the attempt to verify the domain name of the peer certificate fails, the client connection also fails.

DHGroup

Allows a network administrator to override the default group value on a VPN device used to generate Diffie-Hellman key pairs.

RadiusSDI

Tells the VPN Client to assume that Radius SDI is being used for extended authentication (XAuth).

SDIUseHardwareToken

Enables a connection entry to avoid using RSA SoftID software.

EnableSplitDNS

Determines whether the connection entry is using splitDNS, which can direct packets in clear text over the Internet to domains served through an external DNS or through an IPSec tunnel to domains served by a corporate DNS. This feature is configured on the VPN 3000 Concentrator and is used in a split-tunneling connection.

UseLegacyIKEPort

Changes the default IKE port from 500/4500 to dynamic ports to be used during all connections. You must explicitly enter this parameter into the .pcf file.

ForceNetlogin

(Windows only) Enables the Force Net Login feature for this connection profile.
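To check these parameters from a script, a profile can be read with Python's standard configparser module. The sketch below rests on two assumptions that the table does not spell out: that the .pcf file is an INI-style file with its parameters in a `[main]` section, and that the read-only marker (!) mentioned above is written as a prefix on the parameter name. The sample profile and the function name `load_profile` are made up for illustration.

```python
import configparser

# Made-up sample profile; only parameters described above are used.
SAMPLE_PCF = """\
[main]
EnableNat=1
TunnelingMode=1
!TCPTunnelingPort=10000
PeerTimeout=90
EnableLocalLAN=0
"""


def load_profile(text, section="main"):
    """Parse profile text into (values, read_only_names).

    Assumes an INI-style layout and a leading "!" on read-only
    parameter names (both are assumptions, see the text above).
    """
    parser = configparser.ConfigParser(interpolation=None)
    # Keep parameter names as written; configparser lowercases them by default.
    parser.optionxform = str
    parser.read_string(text)

    values = {}
    read_only = set()
    for name, value in parser[section].items():
        if name.startswith("!"):
            name = name[1:]
            read_only.add(name)
        values[name] = value
    return values, read_only


values, read_only = load_profile(SAMPLE_PCF)
print(values["TunnelingMode"], sorted(read_only))
```

The returned dictionary keeps every value as a string, so range checks and defaults still have to be applied per parameter.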