Oracle was already using the Java programming language intensively in its products, but over the last couple of years this has come into full swing. Since Oracle acquired BEA, and with it the WebLogic application server, and later acquired Sun, Java has been becoming the main application language for Oracle.

We have recently seen legal battles between Google and Oracle about parts of Java, which shows that Oracle is willing to go into battle mode for Java. However, this does not mean that they want to make Java as proprietary as possible. One of the latest cases showing this is the release of ADF Essentials. ADF is the Oracle Application Development Framework, a proprietary development framework built by Oracle to support the development community, which is also used heavily by Oracle development teams internally for product development. One of the things about ADF is that you (officially) need the Oracle WebLogic server to run it. This automatically rules out using ADF to create open source products, and it limits the adoption of ADF because the application server locks you in to Oracle.

Now Oracle has launched ADF Essentials, which can run not only on WebLogic but also on, for example, GlassFish or other application servers such as IBM WebSphere. ADF Essentials can be used for free and you no longer have a vendor lock-in on the application server. ADF Essentials is a stripped-down version of the commercial ADF version.

ADF Essentials is important for Oracle as they hope to boost the adoption of ADF with it, not only of the Essentials version but also of the commercial version. The commercial vision of Oracle behind Essentials is (most likely) that ADF will become more popular and that more developers will start developing the ADF way. That should not only grow the user base of ADF Essentials, it should also increase adoption of the commercial version of ADF and, by doing so, boost the revenue from WebLogic licenses.

If you are a company that will be building applications based upon Oracle ADF Essentials, it is good to take note of the ADF Essentials license policy. If we take a look at it, we note the following:

You are bound by the Oracle Technology Network ("OTN") License Agreement terms. The OTN License Agreement terms also apply to all updates you receive under your Technology Track subscription.

This means you will have to review the OTN License Agreement in depth to be sure what you can and cannot do with ADF Essentials from a legal point of view. Most important to know is the following: Oracle ADF Essentials is a free to develop and deploy packaging of the key core technologies of Oracle ADF.

The personal view on the IT world of Johan Louwers, specially focusing on Oracle technology, Linux and UNIX technology, programming languages and all kinds of nice and cool things happening in the IT world.

Tuesday, October 30, 2012

Monday, October 29, 2012

Oracle database firewall

In a recent blogpost I touched on the subject of deep application boundary validation and stated that when you implement deep application boundary validation you should not only look at the software created at the application level; you should also look at the database level and make sure that all functions, stored procedures and so on are equipped with boundary validation. In essence this comes down to the fact that every package or function should hold code to validate the incoming data. Incoming data is commonly the parameters used to call the code, but it can also be the values in a result set returned by a select statement. When implementing boundary validation you should treat everything coming into your code (parameters, result sets...) as data from an untrusted source that needs to be checked for validity.

The example I used in the previous blogpost was that of a web application. Another good way to strengthen the security of your application and to protect your database is to implement an Oracle database firewall. One of the interesting features of the Oracle database firewall is the option for white-listing.

What white-listing in essence does is create a list of SQL statements which you consider to be safe and which can be executed from the application tier on the database tier. For example, if you have built an application to track shipments, this application will hold a certain number of queries that can be executed against the database (all with different values, for example the tracking number). Those queries are considered safe and can be placed on the whitelist.

When a query is executed by the application server and sent to the Oracle database, the database firewall will check the query against the whitelist. If the query is on the whitelist it will allow the query to pass to the database server to be executed; if the query is not on the whitelist the database firewall will drop the query and it will not be sent to the database server.
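The whitelist check described above can be sketched in a few lines of Python. This is only an illustration of the idea and not how the Oracle database firewall is implemented: the `normalize` helper, the `shipments` table and the `tracking_no` column are all hypothetical. The sketch assumes statements are compared by shape, with literal values replaced by a placeholder, so the same query with a different tracking number still matches.

```python
import re


def normalize(sql: str) -> str:
    """Hypothetical helper: reduce a statement to its shape."""
    sql = re.sub(r"'(?:[^']|'')*'", "?", sql)  # replace string literals
    sql = re.sub(r"\b\d+\b", "?", sql)          # replace numeric literals
    return re.sub(r"\s+", " ", sql).strip().lower()


# Shapes of the statements the (hypothetical) shipment application may run.
WHITELIST = {
    normalize("SELECT status FROM shipments WHERE tracking_no = 'X'"),
}


def is_allowed(sql: str) -> bool:
    """Return True when the statement shape is on the whitelist."""
    return normalize(sql) in WHITELIST
```

With this sketch, a lookup for any tracking number passes, while a statement that has been extended with `OR '1'='1'` has a different shape and is rejected.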

Implementing an Oracle database firewall will help you protect against, for example, SQL injection attacks against your application server. When an attacker finds a way to insert additional SQL code into your application via the GUI, the resulting statement will not match the whitelist, so it will never reach the database server; it will be dropped by the Oracle database firewall.

Secondly, it will protect you against attacks which make use of stolen credentials from the application server or of a compromised application server. Your application server needs login credentials for your database server to be able to communicate. If someone is able to steal these credentials from the application server, they would be able to connect to the database and start freely querying the data. When you have an Oracle database firewall in place, all statements that are not on the whitelist will be dropped, so an attacker holding the database login credentials of your application server will be very limited in his attempts to exploit them.

There are, however, some more nice features in the Oracle database firewall. We have just stated that you can drop (block) a statement before it is sent to the database, but there are more options. Besides the allow and block options you also have log, alert and substitute.

Log is quite straightforward: a log entry will be made stating that a certain statement has been sent and executed. This can be handy when you want certain actions to be logged, for example when you have to be able to trace certain things or have to comply with a certain legal or internal rule.

Alert will send an alert directly to a system. This is handy when you are tracking certain behaviour or have any other need for alerting, which we will not go into in this post.

Especially interesting is substitute, which allows you to catch a certain statement and replace it with another. This can be useful, for example, if you catch a certain attack characteristic and do not want to block the attacker but want to feed him incorrect information. In some cases it can also be handy to trick packaged software, for which you do not have the source code, into interacting with your database in a different way. It can also be used (although this is not promoted) to port an application written for one database version (or vendor) to another.
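To make the five options a bit more concrete, here is a toy model in Python. It is a sketch under assumptions, not the firewall's real configuration or API: the `POLICY` table, the table names and the `apply_policy` function are hypothetical, and the sketch assumes that log and alert still forward the statement while recording an event.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    BLOCK = "block"
    LOG = "log"
    ALERT = "alert"
    SUBSTITUTE = "substitute"


# Hypothetical policy: statement text -> (action, optional replacement).
POLICY = {
    "SELECT status FROM shipments": (Action.ALLOW, None),
    "SELECT * FROM audit_trail": (Action.LOG, None),
    "SELECT * FROM salaries": (Action.ALERT, None),
    "SELECT * FROM credit_cards": (
        Action.SUBSTITUTE,
        "SELECT * FROM credit_cards WHERE 1 = 0",
    ),
}


def apply_policy(sql, events):
    """Return the statement to forward to the database, or None to drop it."""
    # Anything that is not listed is blocked, as with a whitelist.
    action, replacement = POLICY.get(sql, (Action.BLOCK, None))
    if action is Action.BLOCK:
        return None
    if action is Action.SUBSTITUTE:
        return replacement
    if action in (Action.LOG, Action.ALERT):
        # Assumption: the statement is still forwarded after the event is recorded.
        events.append((action.value, sql))
    return sql
```

In this model the substitute rule returns a statement that yields no rows, so the caller gets an answer without seeing the real data.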

Sunday, October 28, 2012

Deep application boundary validation

When developing an application, security is (or should be) always a point of attention. Unfortunately, security is commonly addressed only when architects discuss the implementation of the application. At that stage security comes into play when people are looking at how to put in firewalls, host hardening, database security and communication with other servers and services. These are all valid points that need the appropriate attention; however, security should be a point of consideration at a much earlier stage of the process.

Boundary validation is a method used to validate input coming from an untrusted source into a trusted source. A simple example of boundary validation (and one that is commonly done) is the validation of input given by a user into an application. If we take for example a web based application, the input from the user coming from the Internet or intranet is considered untrusted and is validated when crossing the boundary from the untrusted user space into the trusted server side scripting.

This form of boundary validation is commonly implemented in a lot of (web based) applications. Even though this is a good approach, in many cases it is not enough, even for the simplest application. As we are using object-oriented programming, in most cases one function calls another function to perform some actions. With functions calling functions (calling functions), it is very likely that if you only implement boundary validation when receiving the data from the user, the data can still cause a security risk in one of the other functions.

As an example, suppose boundary validation checks the input on an order entry screen against the rules for the order entry screen. You may well have secured this specific function against malicious data. However, as user input is commonly used as input for all kinds of other functions, some input that is not considered malicious for the order entry screen might trigger a vulnerability in a totally different function somewhere down the line. This might result in an attacker gaining access to data or functionality somewhere else in your application, which was not the intention of the developer.

To prevent such an attack it is advisable to consider as unsafe not only the data coming into the application as a whole, but also all data passed between functions. This means that you will have to do boundary checking at every function and not only at the functions exposed to the user. It also means that you cannot do boundary checking only at the application server; you will also have to make sure boundary checking is done in, for example, all your database procedures and functions.

By implementing such a model you will harden your application even more against attacks. Implementing such a strategy causes more work during coding; however, this form of attack, even though it is less commonly known, is quite regularly used by attackers to gain access to systems and data.
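A minimal sketch of what checking at every function could look like, written in Python. All names here (`validate_tracking_no`, `lookup_shipment`, `handle_request`) and the tracking number format are hypothetical and only serve the illustration; the point is that the inner function validates its input again instead of trusting its caller.

```python
import re

# Assumed format for the illustration: 6 to 20 upper case letters or digits.
TRACKING_NO = re.compile(r"[A-Z0-9]{6,20}")


def validate_tracking_no(value):
    """Boundary check shared by every function that accepts a tracking number."""
    if not isinstance(value, str) or not TRACKING_NO.fullmatch(value):
        raise ValueError("invalid tracking number")
    return value


def lookup_shipment(tracking_no, shipments):
    # Inner function: validates again, so it is safe no matter who calls it.
    tracking_no = validate_tracking_no(tracking_no)
    return shipments.get(tracking_no)


def handle_request(form, shipments):
    # Outer boundary: user input enters the application here.
    tracking_no = validate_tracking_no(form.get("tracking_no"))
    return lookup_shipment(tracking_no, shipments)
```

If another part of the application later calls `lookup_shipment` directly with unchecked data, the call fails with a `ValueError` instead of passing the data further down the line. The same idea applies to database procedures, where the check would live inside the procedure itself.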

Wednesday, October 24, 2012

Oracle database session memory usage

Oracle databases are commonly used as the backbone of large applications and can have a lot of concurrent sessions connecting to them. Every session has its own characteristics and its own resource usage. When your database is using a lot of memory and you want to check where this memory goes, it can be good to see which session is using what amount of memory. Investigating how much memory your database is consuming is quite straightforward; tracking which session is using what specific amount of memory can be a bit more tricky.

Oracle has provided some guidance on checking memory usage per session in your database in Metalink note 239846.1. Here they provide a script which can be used.

Your output will look something like this

SESSION                                  PID/THREAD CURRENT SIZE     MAXIMUM SIZE
---------------------------------------- ---------- ---------------- ----------------
9 - SYS: myworkstation                   2258       10.59 MB         10.59 MB
3 - LGWR: testserver                     2246       5.71 MB          5.71 MB
2 - DBW0: testserver                     2244       2.67 MB          2.67 MB

The script is not part of the official Oracle support scripting and is not an official Oracle script. You can check the latest version of it on Metalink or you can find it below:

REM =============================================================================
REM ************ SCRIPT TO MONITOR MEMORY USAGE BY DATABASE SESSIONS ************
REM =============================================================================
REM Created: 21/march/2003
REM Last update: 28/may/2003
REM
REM NAME
REM ====
REM MEMORY.sql
REM
REM AUTHOR
REM ======
REM Mauricio Buissa
REM
REM DISCLAIMER
REM ==========
REM This script is provided for educational purposes only. It is NOT supported by
REM Oracle World Wide Technical Support. The script has been tested and appears
REM to work as intended. However, you should always test any script before
REM relying on it.
REM
REM PURPOSE
REM =======
REM Retrieves PGA and UGA statistics for users and background processes sessions.
REM
REM EXECUTION ENVIRONMENT
REM =====================
REM SQL*Plus
REM
REM ACCESS PRIVILEGES
REM =================
REM Select on V$SESSTAT, V$SESSION, V$BGPROCESS, V$PROCESS and V$INSTANCE.
REM
REM USAGE
REM =====
REM $ sqlplus "/ as sysdba" @MEMORY
REM
REM INSTRUCTIONS
REM ============
REM Call MEMORY.sql from SQL*Plus, connected as any DBA user.
REM Press ENTER whenever you want to refresh information.
REM You can change the ordered column and the statistics shown by choosing from the menu.
REM Spool files named MEMORY_YYYYMMDD_HH24MISS.lst will be generated in the current directory.
REM Every time you refresh screen, a new spool file is created, with a snapshot of the statistics shown.
REM These snapshot files may be uploaded to Oracle Support Services for future reference, if needed.
REM
REM REFERENCES
REM ==========
REM "Oracle Reference" - Online Documentation
REM
REM SAMPLE OUTPUT
REM =============
REM :::::::::::::::::::::::::::::::::: PROGRAM GLOBAL AREA statistics :::::::::::::::::::::::::::::::::
REM
REM SESSION                                            PID/THREAD CURRENT SIZE       MAXIMUM SIZE
REM -------------------------------------------------- ---------- ------------------ ------------------
REM 9 - SYS: myworkstation                             2258       10.59 MB           10.59 MB
REM 3 - LGWR: testserver                               2246       5.71 MB            5.71 MB
REM 2 - DBW0: testserver                               2244       2.67 MB            2.67 MB
REM ...
REM
REM :::::::::::::::::::::::::::::::::::: USER GLOBAL AREA statistics ::::::::::::::::::::::::::::::::::
REM
REM SESSION                                            PID/THREAD CURRENT SIZE       MAXIMUM SIZE
REM -------------------------------------------------- ---------- ------------------ ------------------
REM 9 - SYS: myworkstation                             2258       0.29 MB            0.30 MB
REM 5 - SMON: testserver                               2250       0.06 MB            0.06 MB
REM 4 - CKPT: testserver                               2248       0.05 MB            0.05 MB
REM ...
REM
REM SCRIPT BODY
REM ===========

REM Starting script execution
CLE SCR
PROMPT .
PROMPT . ======== SCRIPT TO MONITOR MEMORY USAGE BY DATABASE SESSIONS ========
PROMPT .

REM Setting environment variables
SET LINESIZE 200
SET PAGESIZE 500
SET FEEDBACK OFF
SET VERIFY OFF
SET SERVEROUTPUT ON
SET TRIMSPOOL ON
COL "SESSION" FORMAT A50
COL "PID/THREAD" FORMAT A10
COL " CURRENT SIZE" FORMAT A18
COL " MAXIMUM SIZE" FORMAT A18

REM Setting user variables values
SET TERMOUT OFF
DEFINE sort_order = 3
DEFINE show_pga = 'ON'
DEFINE show_uga = 'ON'
COL sort_column NEW_VALUE sort_order
COL pga_column NEW_VALUE show_pga
COL uga_column NEW_VALUE show_uga
COL snap_column NEW_VALUE snap_time
SELECT nvl(:sort_choice, 3) "SORT_COLUMN" FROM dual
/
SELECT nvl(:pga_choice, 'ON') "PGA_COLUMN" FROM dual
/
SELECT nvl(:uga_choice, 'ON') "UGA_COLUMN" FROM dual
/
SELECT to_char(sysdate, 'YYYYMMDD_HH24MISS') "SNAP_COLUMN" FROM dual
/

REM Creating new snapshot spool file
SPOOL MEMORY_&snap_time

REM Showing PGA statistics for each session and background process
SET TERMOUT &show_pga
PROMPT
PROMPT :::::::::::::::::::::::::::::::::: PROGRAM GLOBAL AREA statistics :::::::::::::::::::::::::::::::::
SELECT to_char(ssn.sid, '9999') || ' - ' || nvl(ssn.username, nvl(bgp.name, 'background')) ||
       nvl(lower(ssn.machine), ins.host_name) "SESSION",
       to_char(prc.spid, '999999999') "PID/THREAD",
       to_char((se1.value/1024)/1024, '999G999G990D00') || ' MB' " CURRENT SIZE",
       to_char((se2.value/1024)/1024, '999G999G990D00') || ' MB' " MAXIMUM SIZE"
FROM   v$sesstat se1, v$sesstat se2, v$session ssn, v$bgprocess bgp, v$process prc,
       v$instance ins, v$statname stat1, v$statname stat2
WHERE  se1.statistic# = stat1.statistic# and stat1.name = 'session pga memory'
AND    se2.statistic# = stat2.statistic# and stat2.name = 'session pga memory max'
AND    se1.sid = ssn.sid
AND    se2.sid = ssn.sid
AND    ssn.paddr = bgp.paddr (+)
AND    ssn.paddr = prc.addr (+)
ORDER BY &sort_order DESC
/

REM Showing UGA statistics for each session and background process
SET TERMOUT &show_uga
PROMPT
PROMPT :::::::::::::::::::::::::::::::::::: USER GLOBAL AREA statistics ::::::::::::::::::::::::::::::::::
SELECT to_char(ssn.sid, '9999') || ' - ' || nvl(ssn.username, nvl(bgp.name, 'background')) ||
       nvl(lower(ssn.machine), ins.host_name) "SESSION",
       to_char(prc.spid, '999999999') "PID/THREAD",
       to_char((se1.value/1024)/1024, '999G999G990D00') || ' MB' " CURRENT SIZE",
       to_char((se2.value/1024)/1024, '999G999G990D00') || ' MB' " MAXIMUM SIZE"
FROM   v$sesstat se1, v$sesstat se2, v$session ssn, v$bgprocess bgp, v$process prc,
       v$instance ins, v$statname stat1, v$statname stat2
WHERE  se1.statistic# = stat1.statistic# and stat1.name = 'session uga memory'
AND    se2.statistic# = stat2.statistic# and stat2.name = 'session uga memory max'
AND    se1.sid = ssn.sid
AND    se2.sid = ssn.sid
AND    ssn.paddr = bgp.paddr (+)
AND    ssn.paddr = prc.addr (+)
ORDER BY &sort_order DESC
/

REM Showing sort information
SET TERMOUT ON
PROMPT
BEGIN
  IF (&sort_order = 1) THEN
    dbms_output.put_line('Ordered by SESSION');
  ELSIF (&sort_order = 2) THEN
    dbms_output.put_line('Ordered by PID/THREAD');
  ELSIF (&sort_order = 3) THEN
    dbms_output.put_line('Ordered by CURRENT SIZE');
  ELSIF (&sort_order = 4) THEN
    dbms_output.put_line('Ordered by MAXIMUM SIZE');
  END IF;
END;
/

REM Closing current snapshot spool file
SPOOL OFF

REM Showing the menu and getting sort order and information viewing choice
PROMPT
PROMPT Choose the column you want to sort: == OR == You can choose which information to see:
PROMPT ... 1. Order by SESSION ... 5. PGA and UGA statistics (default)
PROMPT ... 2. Order by PID/THREAD ... 6. PGA statistics only
PROMPT ... 3. Order by CURRENT SIZE (default) ... 7. UGA statistics only
PROMPT ... 4. Order by MAXIMUM SIZE
PROMPT
ACCEPT choice NUMBER PROMPT 'Enter the number of your choice or press ENTER to refresh information: '
VAR sort_choice NUMBER
VAR pga_choice CHAR(3)
VAR uga_choice CHAR(3)
BEGIN
  IF (&choice = 1 OR &choice = 2 OR &choice = 3 OR &choice = 4) THEN
    :sort_choice := &choice;
    :pga_choice := '&show_pga';
    :uga_choice := '&show_uga';
  ELSIF (&choice = 5) THEN
    :sort_choice := &sort_order;
    :pga_choice := 'ON';
    :uga_choice := 'ON';
  ELSIF (&choice = 6) THEN
    :sort_choice := &sort_order;
    :pga_choice := 'ON';
    :uga_choice := 'OFF';
  ELSIF (&choice = 7) THEN
    :sort_choice := &sort_order;
    :pga_choice := 'OFF';
    :uga_choice := 'ON';
  ELSE
    :sort_choice := &sort_order;
    :pga_choice := '&show_pga';
    :uga_choice := '&show_uga';
  END IF;
END;
/

REM Finishing script execution
PROMPT Type "@MEMORY" and press ENTER
SET FEEDBACK ON
SET VERIFY ON
SET SERVEROUTPUT OFF
SET TRIMSPOOL OFF

REM =============
REM END OF SCRIPT
REM =============
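The core of the report is simple arithmetic: the statistic values are byte counts, the script divides them by 1024 twice to get megabytes, and by default it orders the rows by current size, largest first. The Python sketch below only mirrors that arithmetic. The byte counts are hypothetical values chosen to match the sample output above, and `to_mb` and `report` are illustrative names that do not appear in the script.

```python
# Hypothetical byte counts standing in for "session pga memory" values.
sessions = [
    ("3 - LGWR: testserver", 5_987_369),
    ("9 - SYS: myworkstation", 11_104_420),
    ("2 - DBW0: testserver", 2_799_698),
]


def to_mb(value_bytes):
    # Same arithmetic as (value/1024)/1024 in the script.
    return value_bytes / 1024 / 1024


def report(rows):
    """Return formatted lines ordered by current size, largest first."""
    ordered = sorted(rows, key=lambda row: row[1], reverse=True)
    return [f"{name:<30}{to_mb(size):>10.2f} MB" for name, size in ordered]
```

Calling `report(sessions)` lists the SYS session first, followed by LGWR and DBW0, which is the same order as in the sample output.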

Sunday, October 21, 2012

Use Oracle SOA for mobile

We have seen a trend where more and more people start using mobile applications; people use their phones and tablets more and more, not only for social things but for all kinds of actions. As the mobile trend is well on its way and still picking up speed, we now see the same trend in the enterprise market. Mail on your phone is already common: large numbers of employees get their business mail on their phone and use it to interact while not in the office. The next step is that companies make applications mobile so they are available to employees 24 hours a day, 7 days a week, on any device.

The issue, however, is that enterprise applications are commonly complex and often consist of a multitude of applications and servers. Building even a simple mobile application is not that easy, as you have to pull information from and interact with multiple applications to build a single application that can be used in a mobile way. Secondly, enterprise applications are commonly not equipped to be made mobile and are often "old".

Oracle provides a couple of things that can make your life a lot easier as an architect and as a developer. If you are developing a platform and application intended to make enterprise applications (legacy applications) available on a mobile device, you do want to look at the Oracle SOA Suite and at Oracle ADF.

Oracle SOA Suite;

The Oracle SOA Suite can help you design and build a way to interact with a multitude of backoffice systems and tie this into a single point of interaction for your mobile application platform. For example, if you need to pull information from two different systems into your mobile application, do an interaction on this data and ship it to a number of other systems, it does not make sense to build all those interactions and connections into your mobile application. It would make more sense to create a communication and interaction model in your mobile application server and use the Oracle SOA Suite to take care of all the interactions for you.

This will help you simplify the interaction between your mobile application, the server your mobile application connects to, and all the backoffice systems you need to interact with.
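The idea of a single point of interaction can be sketched as a small facade in Python. This is not the Oracle SOA Suite API, only a picture of the role such an integration layer plays: the two backoffice systems, their data and all class and method names are hypothetical.

```python
class OrderSystem:
    """Hypothetical backoffice system holding order data."""

    def __init__(self):
        self._orders = {"A1": {"customer_id": "C7", "status": "shipped"}}

    def get_order(self, order_id):
        return self._orders[order_id]


class CustomerSystem:
    """Hypothetical backoffice system holding customer data."""

    def __init__(self):
        self._customers = {"C7": {"name": "Example Customer"}}

    def get_customer(self, customer_id):
        return self._customers[customer_id]


class MobileFacade:
    """Single point of interaction: the mobile app only talks to this class."""

    def __init__(self, orders, customers):
        self._orders = orders
        self._customers = customers

    def order_summary(self, order_id):
        # Combine data from both systems into one answer for the mobile app.
        order = self._orders.get_order(order_id)
        customer = self._customers.get_customer(order["customer_id"])
        return {
            "order_id": order_id,
            "status": order["status"],
            "customer": customer["name"],
        }
```

The mobile application makes one call, `order_summary("A1")`, and does not need to know that two systems were involved. When a backoffice system is replaced, only the facade has to change.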

Oracle ADF;

Oracle ADF (Oracle Application Development Framework) is a framework developed by Oracle for developing Java applications. If you are about to develop mobile applications and the needed background services in combination with SOA, this is one of the things you do want to look into. Oracle provides a lot of functions out of the box to interact with the Oracle SOA components as well as to build mobile applications for multiple devices.

The video below gives a quick guide to developing mobile applications with the help of Oracle ADF.

Saturday, October 20, 2012

Make SharePoint mobile

A lot of companies use Microsoft SharePoint in one form or another, and they use it for good reasons. Even though I am in favour of open source products, it has to be said that Microsoft has done a great job developing their SharePoint solution, and it ties in nicely with the MS operating systems and the domain security parts. One thing, however, has to be said about SharePoint: it is not that mobile, and it quite often requires you to boot your laptop and connect via VPN to your corporate network to make full use of it.

As a mobile worker I am not always in a location where I can boot my laptop and start a VPN connection to quickly check a document, nor do I always have the time. What would be ideal is a Dropbox-like function that shows me files on all my devices, including my mobile phone and tablet.

Now Good is providing a good way to share files and interact in a safe manner with your SharePoint installation from your mobile devices. Using the Good solution (and possibly solutions from other vendors) will help you make your workforce even more mobile. Keeping in mind the growing adoption of mobile devices and the new way of working, I think we will see more and more multi-platform solutions and tools coming to the market in the near future.

You can check out a video of the Good Share application below:

Tuesday, October 16, 2012

Debug Oracle Linux with DTrace

For most Sun Solaris administrators DTrace has held no secrets for years; it is a tool used by many to debug systems and improve code. DTrace, in full the dynamic tracing framework, was developed by Sun and has been released under the CDDL license. Due to this, DTrace can be found in multiple UNIX (and UNIX-like) systems: it has been ported to FreeBSD, NetBSD and Mac OS X, and now also to Oracle Linux.

DTrace is designed to give operational insights that allow users to tune and troubleshoot applications and the OS itself.

Tracing programs (also referred to as scripts) are written using the D programming language (not to be confused with other programming languages named "D"). The language is a subset of C with added functions and variables specific to tracing. D programs resemble awk programs in structure; they consist of a list of one or more probes (instrumentation points), and each probe is associated with an action. These probes are comparable to a pointcut in aspect-oriented programming. Whenever the condition for the probe is met, the associated action is executed (the probe "fires"). A typical probe might fire when a certain file is opened, or a process is started, or a certain line of code is executed. A probe that fires may analyze the run-time situation by accessing the call stack and context variables and evaluating expressions; it can then print out or log some information, record it in a database, or modify context variables. The reading and writing of context variables allows probes to pass information to each other, allowing them to cooperatively analyze the correlation of different events.
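The probe, condition and action structure described above can be modelled in a few lines. Note that this is a toy model in Python and not D code: the `Tracer` class and the probe names `file:open` and `proc:start` are hypothetical and do not correspond to real DTrace probes. It only shows how an action runs when its probe fires and its condition holds, and how actions can share data through context variables.

```python
class Tracer:
    """Toy model of probes with predicates and actions (not real DTrace)."""

    def __init__(self):
        self._probes = {}   # probe name -> list of (predicate, action)
        self.context = {}   # shared variables, visible to every action

    def enable(self, probe, action, predicate=lambda event: True):
        self._probes.setdefault(probe, []).append((predicate, action))

    def fire(self, probe, event):
        # Probes nobody enabled have no registered actions, so nothing runs.
        for predicate, action in self._probes.get(probe, ()):
            if predicate(event):
                action(event, self.context)


def count_open(event, context):
    # Action: count matching events in a shared context variable.
    context["opens"] = context.get("opens", 0) + 1


tracer = Tracer()
tracer.enable(
    "file:open",
    count_open,
    predicate=lambda event: event["path"].startswith("/etc/"),
)
tracer.fire("file:open", {"path": "/etc/passwd"})   # predicate holds
tracer.fire("file:open", {"path": "/tmp/scratch"})  # filtered out
tracer.fire("proc:start", {"pid": 42})              # probe not enabled
```

After these three events only the first one has been counted, because the second fails the predicate and the third belongs to a probe that was never enabled.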

Special consideration has been taken to make DTrace safe to use in a production environment. For example, there is minimal probe effect when tracing is underway, and no performance impact associated with any disabled probe; this is important since there are tens of thousands of DTrace probes that can be enabled. New probes can also be created dynamically.

Porting DTrace to Oracle Linux makes a lot of sense for Oracle, as it has owned Solaris since the acquisition of Sun. Being able to write scripts in D and share them between Oracle Linux and Oracle Solaris gives the Oracle community and Oracle support the option to create scripts once and use them on the two primary operating systems produced by Oracle.

For those who are new to DTrace, a good place to start is the DTrace QuickStart guide published on tablespace.net. The guide shows the benefits of using DTrace, but also the potential security risks when it can be used by everyone: because you can debug the operating system, you can snoop information that is not intended for everyone. The example used in the guide is snooping the password that a user enters when running passwd.

For those interested in DTrace and the people behind porting it to Oracle Linux, below is a video with Kris van Hees, who has been working on the porting project within Oracle.

Sunday, October 14, 2012

The commitment of Oracle towards OpenSource

Oracle is using open source more and more, and it is in the foundation of some of their products. If you look at engineered systems like Exadata and Exalogic you will see that a lot of the components are open source. When you boot an Exadata for the first time, the first question you will be asked via the terminal is whether you want to install Oracle Solaris or Oracle Linux as the operating system.

Oracle Linux is one of the most visible products where Oracle is involved in open source. There is also MySQL, for example, which is well known to a lot of people. However, Oracle is also contributing a lot in areas where you do not see the Oracle name so prominently on the products. For example, Btrfs is one of the things Oracle is investing a lot of time in.

In the video below you can watch Edward Screven explain Oracle's strategy in relation to open source. Edward is the chief corporate architect within Oracle and responsible for the system strategy, including the strategy for open source and the open source projects that are run by the team of Wim Coekaerts within Oracle.

Wednesday, October 03, 2012

Oracle Data Guard long distance replication

Oracle Data Guard has been around for some time, enabling you to have a standby database and keep it in sync with your primary database for failover situations. One of the lesser-known features of Oracle Data Guard is the use of a Data Guard Broker. In many setups the use of Oracle Data Guard is somewhat limited by the distance between your primary datacenter and your secondary datacenter. You are limited by the laws of physics in where your secondary datacenter can be located while keeping performance at an acceptable level. This is because, if you are using SYNC synchronisation, your primary database waits for the secondary database to confirm the write. When operating in protection or availability mode, the log writer on the primary database waits for an acknowledgement from the standby database. Only after that acknowledgement is received does it acknowledge the commit to the client application.
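To get a feeling for that physical limit, the rough arithmetic can be sketched in Python. The only input is an assumption, not an Oracle figure: light travels through optical fibre at roughly 200,000 km per second, which is 200 km per millisecond. Real networks add switching and routing delay on top of this, so the result is a lower bound.

```python
# Assumption: signal speed in optical fibre is roughly 200,000 km/s,
# which is 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on the network round trip for a given cable distance."""
    return 2 * distance_km / FIBER_KM_PER_MS


for km in (10, 100, 1000, 5000):
    wait = min_round_trip_ms(km)
    print(f"{km:>5} km -> at least {wait:.1f} ms added to every synchronous commit")
```

At 100 km of cable the acknowledgement cannot arrive in less than about 1 ms; at 5000 km every synchronous commit waits at least 50 ms, whatever hardware is used.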

Even though Oracle Data Guard has been available for some time, it has been improved a lot in the new Oracle Database 12c release and will be compatible with the container database setup and the pluggable database concept.

Oracle Data Guard broker is a distributed management framework which gives you more flexibility when designing your primary and standby database setup with Data Guard. Oracle Data Guard provides three protection modes as standard: protection, availability and performance. You can set the mode with a SQL command in your database.

SQL> ALTER DATABASE SET STANDBY DATABASE TO MAXIMIZE {PROTECTION | AVAILABILITY | PERFORMANCE};

All three options have their own use and should be considered carefully when implemented, and checked against the SLAs you have for this database, the RPO set for recovery and the performance demands for this database. Another factor to take into consideration is the number of transactions per second that will be running against your database. All transactions that are not reads will have to be sent to the standby database(s), which will put load on your entire system.

To be able to make the correct decision you will have to understand what the protection, availability and performance options do.

Maximum Protection:

Maximum Protection offers the highest level of data protection. Maximum Protection synchronously transmits redo records to the standby database(s) using SYNC redo transport. The Log Writer process on the primary database will not acknowledge the commit to the client application until Data Guard confirms that the transaction data is safely on disk on at least one standby server. Because of the synchronous nature of redo transmission, Maximum Protection can impact primary database response time. Configuring a low latency network with sufficient bandwidth for peak transaction load can minimize this impact. Financial institutions and lottery systems are two examples where this highest level of data protection has been deployed.

It is strongly recommended that Maximum Protection be configured with at least two standby databases so that if one standby destination fails, the surviving destination can still acknowledge the primary, and production can continue uninterrupted. Users who are considering this protection mode must be willing to accept that the primary database will stall (and eventually crash) if no standby database is able to return an acknowledgement that redo has been received and written to disk. This behavior is required by the rules of Maximum Protection to achieve zero data loss under circumstances where there are multiple failures (e.g. the network between the primary and standby fails, and then a second event causes the primary site to fail). If this is not acceptable, and you want zero data loss protection but the primary must remain available even in circumstances where the standby cannot acknowledge data is protected, use the Maximum Availability protection mode described below.

Maximum Availability:

Maximum Availability provides the next highest level of data protection while maintaining availability of the primary database. As with Maximum Protection, redo data is synchronously transmitted to the standby database using SYNC redo transport services. Log Writer will not acknowledge that transactions have been committed by the primary database until Data Guard has confirmed that the transaction data is available on disk on at least one standby server. However, unlike Maximum Protection, primary database processing will continue if the last participating standby database becomes unavailable (e.g. network connectivity problems or standby failure). The standby database will temporarily fall behind compared to the primary database while it is in a disconnected state. When contact is re-established, Data Guard automatically resynchronizes the standby database with the primary.

Because of synchronous redo transmission, this protection mode can impact response time and throughput. Configuring a low latency network with sufficient bandwidth for peak transaction load can minimize this impact.

Maximum Availability is suitable for businesses that want the assurance of zero data loss protection, but do not want the production database to be impacted by network or standby server failures. These businesses will accept the possibility of data loss should a second failure subsequently affect the production database before the initial network or standby failure is resolved and the configuration resynchronised.

Maximum Performance:

Maximum Performance is the default protection mode. It offers slightly less data protection with the potential for higher primary database performance than Maximum Availability mode. In this mode, as the primary database processes transactions, redo data is asynchronously shipped to the standby database using ASYNC redo transport. Log Writer on the primary database does not wait for standby acknowledgement before it acknowledges the commit to the client application. This eliminates the potential overhead of the network round-trip and standby disk i/o on primary database response time. If any standby destination becomes unavailable, processing continues on the primary database and there is little or no impact on performance.

During normal operation, data loss exposure is limited to that amount of data that is in-flight between the primary and standby – an amount that is a function of the network’s capacity to handle the volume of redo data generated by the primary database. If adequate bandwidth is provided, total data loss exposure is very small or zero.

Maximum Performance should be used when availability and performance on the primary database are more important than the risk of losing a small amount of data. This mode is suitable for Data Guard deployments in cases where the latency inherent in a customer’s network is high enough to limit the suitability of synchronous redo transmission.
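The difference between the three modes can be summarised in a small Python model. This is a simplified sketch and not Oracle code: the function name and all timings are hypothetical, the local and remote redo writes are assumed to overlap, and network timeouts are ignored.

```python
from typing import Optional

MODES = ("PROTECTION", "AVAILABILITY", "PERFORMANCE")


def commit_wait_ms(mode: str, local_write_ms: float, round_trip_ms: float,
                   standby_write_ms: float,
                   standby_reachable: bool = True) -> Optional[float]:
    """Return how long the client waits for a commit, or None if the primary stalls."""
    if mode not in MODES:
        raise ValueError(f"unknown protection mode: {mode}")
    if mode == "PERFORMANCE":
        # ASYNC transport: the commit never waits for the standby.
        return local_write_ms
    if standby_reachable:
        # SYNC transport: assume the local and remote writes overlap,
        # so the commit waits for whichever finishes last.
        return max(local_write_ms, round_trip_ms + standby_write_ms)
    if mode == "AVAILABILITY":
        # No standby can acknowledge: processing continues on the primary alone.
        return local_write_ms
    # PROTECTION with no acknowledging standby: the primary stalls.
    return None


for mode in MODES:
    normal = commit_wait_ms(mode, 1.0, 10.0, 1.0)
    degraded = commit_wait_ms(mode, 1.0, 10.0, 1.0, standby_reachable=False)
    print(f"{mode:<12} standby up: {normal}  standby down: {degraded}")
```

With a 10 ms round trip the two synchronous modes wait 11 ms per commit while Maximum Performance waits only for the 1 ms local write. When the standby is down, the model returns `None` for Maximum Protection to represent the stall.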

When you use Data Guard Broker as a man-in-the-middle solution you can mix and match the above-mentioned modes. This will enable you to get past some of the physical limitations around long-distance replication. The model below shows how you could do long-distance data replication using the Oracle Data Guard Broker technology as a true distributed management framework.

In the above image you can see that Data Guard is using a SYNC connection to the Oracle Data Guard broker. The Data Guard broker should be located geographically close to the primary database and should be equipped with networking capabilities that are up to the task. With the SYNC method the primary database waits for an acknowledgement from the broker. As soon as the acknowledgement is received, the primary database can acknowledge the commit to the client.

From this point on, Oracle Data Guard broker can be configured to use an ASYNC connection to ship the changes to the standby database. By using the ASYNC option you can achieve data synchronisation over a much longer distance. Oracle Database 12c brings some improvements on this point specifically, which will be available in 2013 in the first 12.1 release of the new database concept.

You can place the Oracle Data Guard broker in the same datacenter as the primary database; however, it would make good sense to place it in a different datacenter. With a SYNC connection to the Oracle Data Guard broker, all your data will be in the datacenter where the broker is located, and the broker will forward it to the standby database. So in case of an emergency all data will still be transported to the standby database, even in the case of a sudden total loss of the primary datacenter.
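The reasoning behind placing the intermediate component in a separate datacenter can be sketched as a toy Python model. The `Relay` class and the transaction names are hypothetical and only mimic the behaviour described above: redo is acknowledged as soon as the relay holds it, and is forwarded to the far standby later.

```python
from collections import deque


class Relay:
    """Toy intermediate hop: acknowledges redo synchronously, forwards it later."""

    def __init__(self):
        self.pending = deque()

    def receive(self, redo: str) -> bool:
        # SYNC leg: the redo is held here before the primary gets its acknowledgement.
        self.pending.append(redo)
        return True

    def drain_to(self, standby: list) -> None:
        # ASYNC leg: ship everything still queued to the distant standby, in order.
        while self.pending:
            standby.append(self.pending.popleft())


relay = Relay()
standby = []
acknowledged = []

for txn in ("txn-1", "txn-2", "txn-3"):
    if relay.receive(txn):
        acknowledged.append(txn)  # the client sees the commit as durable

# Suppose the primary datacenter is lost at this point: the standby is still empty,
# but the relay in the other datacenter holds every acknowledged transaction.
relay.drain_to(standby)

print(standby == acknowledged)
```

If the relay were in the same datacenter as the primary, a total loss of that site would take the queued redo with it, which is why the separate location matters.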

Tuesday, October 02, 2012

Oracle Database 12c introduction

During the 2012 edition of the annual Oracle OpenWorld conference in San Francisco, Oracle announced a new version of their database. The new version will be Oracle Database 12c, where the c stands for cloud; it will not come as a surprise that Oracle is launching a cloud version of the database. For everyone following the releases of Oracle software it has been evident for some time that they are building a full cloud stack of their products.

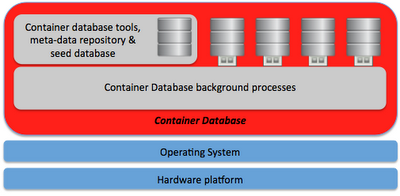

The impressive part of Oracle Database 12c is that it is based upon a completely new and fundamentally changed way of thinking about databases. Oracle Database 12c will be the first multitenant database in the market. The new concept of the 12c database is built around a shared container database which holds and orchestrates the background processes needed to operate the database. Within the 12c container database you can deploy multiple pluggable databases. The limit on the number of pluggable databases deployed within a single container database will be 252 in version 12.1; however, according to Penny Avril, senior director for Oracle Database product management, this number will increase in upcoming releases.
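The relationship between a container database and its pluggable databases can be pictured with a small Python model. This is purely illustrative and not an Oracle API: the class, method and database names are hypothetical, and the only figure taken from the text is the limit of 252 pluggable databases quoted for version 12.1.

```python
class ContainerDatabase:
    """Toy model of a container database that hosts pluggable databases."""

    MAX_PLUGGABLE = 252  # limit quoted for version 12.1

    def __init__(self, name: str):
        self.name = name
        self.pluggable = set()

    def plug(self, pdb_name: str) -> None:
        """Add a pluggable database, enforcing uniqueness and the 12.1 limit."""
        if pdb_name in self.pluggable:
            raise ValueError(f"{pdb_name} is already plugged into {self.name}")
        if len(self.pluggable) >= self.MAX_PLUGGABLE:
            raise RuntimeError(f"{self.name} already holds {self.MAX_PLUGGABLE} pluggable databases")
        self.pluggable.add(pdb_name)

    def unplug(self, pdb_name: str) -> None:
        """Remove a pluggable database so it could be plugged in elsewhere."""
        self.pluggable.remove(pdb_name)


cdb = ContainerDatabase("cdb1")
for number in range(ContainerDatabase.MAX_PLUGGABLE):
    cdb.plug(f"pdb{number}")

try:
    cdb.plug("one_too_many")
except RuntimeError as error:
    print(error)

# Consolidation works both ways: unplugging frees a slot for another database.
cdb.unplug("pdb0")
cdb.plug("one_too_many")
print(len(cdb.pluggable))
```

The point of the model is that the container owns the shared resources once, while the pluggable databases are lightweight entries that can be added and removed.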

The image below shows the high-level architecture of the container database principle introduced in Oracle Database 12c.

The release date is currently set for somewhere in 2013, and the 12.1 release is still in beta testing by a select number of clients and partners, so nothing can be stated yet about the exact details, pricing and licensing of the 12c database. It is, however, intended to have a cost-saving effect for customers. The savings will come from making the management of databases easier and less resource-intensive, as well as from the ability to consolidate multiple databases in one container database as pluggable databases.
