Over the past year I have worked a lot with the technology around Oracle VM and Oracle Enterprise Manager 11g and 12c, and I have been playing a lot with public and private cloud concepts. In recent months some people have asked me why I have not been blogging more about Oracle VM and the other components I have been working with. I intend to write more about them, simply because I now have access to a second test server at home which I can use for testing the things I like to blog about. So you can expect more on this.

As a starter I would like to show you the Oracle video below, in which Kurt Hackel explains to Douglas Phillips, a former VMware employee and current Oracle employee, what Oracle VM is all about.

The personal view on the IT world of Johan Louwers, specially focusing on Oracle technology, Linux and UNIX technology, programming languages and all kinds of nice and cool things happening in the IT world.

Friday, January 27, 2012

Wednesday, January 25, 2012

Filemaker database and iPad development platform

Robert Scoble, who blogs and video-blogs for his website building43.com, also produces a show for the rackspace.com website called "small teams big impact", which is in my opinion, just like building43.com, a great show to watch.

In this episode they talk to FileMaker, a company that belongs to Apple but does not carry the Apple name, and that provides small database solutions and a platform to create iPad applications without the need to program. Even though I feel it comes with a lot of limitations, it can help small non-technical companies to start developing and running their own applications, primarily for internal use but also for external use.

Originally FileMaker was developed as a cross-platform relational database in which you can quickly develop applications in a drag-and-drop fashion. Even though some people might think FileMaker is new to the market, it has been around for quite some years: version 1.0 was released in 1985, when it was part of Forethought Inc.

"A defining characteristic of FileMaker is that the database engine is integrated with the forms (screen, layouts, reports, etc.) used to access it. In this respect, it is closer in operation to desktop database systems such as Microsoft Access and FoxPro. In contrast, most large-scale relational database management systems (RDBMS) separate these tasks, concerning themselves primarily with organization, storage, and retrieval of the data, and providing little to no capability for user interface development. It should be noted, however, that the storage capacity of recent versions of FileMaker far exceeds most desktop database products, and indeed approaches that of many dedicated back-end systems."

Monday, January 23, 2012

The next level of board control

Chaotic Moon already has a reputation for building great and cool things for mobile platforms, and they have some impressive names in their portfolio such as NVIDIA, Discovery Channel, Sony Music, FlightAware and CBS Sports. They describe themselves as:

As the world’s most proven mobile application studio, we provide everything from initial brainstorming and strategy, to custom development and publishing, to managing your entire mobile presence in any application marketplace. We stand on our history of long-term success by embracing an intense desire to reach further, faster and longer than ever before. Whether you’re a Fortune 100 company or a one-man start-up, we’ll help take your app from a concept to reality. Let us establish, design, and manage your mobile strategy. We will provide custom development on any platform to deliver you a new application or resurrect your struggling media.

Being a key innovative player in the mobile application market, they have now also turned their creative heads towards something else: boards. They tried to sell it to the public as a "technology teaser", however we all know it serves two very important goals: (A) start a viral and (B), even more important, have a load of fun building it.

Chaotic Moon Labs' "Board of Awesomeness" is intended as a technology teaser to show how perceptive computing can turn around the way we look at user experiences. The project utilizes a Microsoft Kinect device, Samsung Windows 8 tablet, a motorized longboard, and some standard and custom hardware to create a longboard that watches the user to determine what to do rather than have the operator use a wired or wireless controller. The project uses video recognition, speech recognition, localization data, accelerometer data, and other factors to determine what the user wants to do and allows the board to follow the operators commands without additional aid. Video produced by Michael Gonzales at EqualSons.com

Friday, January 20, 2012

Michio Kaku on CERN news

Some scientific experiments get more media coverage than others. When, some time ago, CERN reported that one of their experiments suggested that neutrinos could travel faster than light, all hell broke loose. Some people found it really strange that scientists were upset that things could move faster than light, and another group of people was disappointed. For all who missed some of their classes when Einstein and his theories were discussed, there is good news.

Michio Kaku explains why people were upset when they thought for a moment that some particles could potentially move faster than light. Michio Kaku is a theoretical physicist, best-selling author, and popularizer of science. He is the co-founder of string field theory (a branch of string theory).

why OpenGraph from Facebook matters

In 2011 Facebook announced during the F8 developer conference that it would launch a new version of the mechanism developers use to interact with the Facebook platform. What they announced and launched is the new version of the OpenGraph protocol, which developers can use to build applications that work and interact with Facebook.

In previous versions of OpenGraph the aim of Facebook was to introduce the "like" button to other websites and to loosely integrate other websites and applications with it. With the next version of OpenGraph you will see that applications become more and more integrated with the Facebook website. On the one hand this is a great way for startup companies to build applications, as Facebook provides them with a great platform and a large set of functions and options to work with information and user interactions on a known and familiar platform. The downside is that all those new companies depend on the choices Facebook will make in the future and on the success of Facebook: without Facebook, the companies depending on it will also cease to exist. However, looking at what Facebook is offering and at its future plans, it is a bet, but a bet that can be made quite safely.

The new version of the Facebook Open Graph protocol is currently in beta and not yet available to all, however a number of companies are currently going live with it and will be showing it off. We can expect to see more and more applications starting to use it. You can see which companies are using it on the timeline apps page on the Facebook site. To name a couple of the applications, companies and sites available: spotify.com, pinterest.com, tripadvisor, Yahoo News, FAB.com and others.

Carl Sjogreen is director of product management, Facebook Platform. His team is building many of the new features, from Timeline to Open Graph to Ticker, and he gave an interview to Robert Scoble from building43.com which you can see below:

On the same subject Robert Scoble talks with Mike Kerns, the head of personalization at Yahoo, who is responsible for the integration of Yahoo and Facebook applications and who has been using the new Open Graph protocol to do so.

Wednesday, January 18, 2012

Stop SOPA/PIPA

For all people who have been living under a rock and have been completely oblivious to the SOPA and PIPA issues that are threatening us (I have just been meeting some of them, so they do exist), please take some time to have a look at this video, which quickly explains what the laws will do and why we, US and non-US citizens, should not let this happen.

PROTECT IP / SOPA Breaks The Internet from Fight for the Future on Vimeo.

Monday, January 16, 2012

DNS BIND load-balance setup

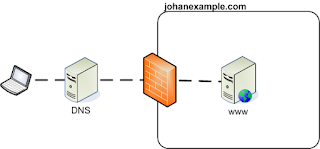

In one of my previous blog posts I stated that one method to load-balance a cluster of (for example) webserver nodes would be to use BIND DNS and round robin. With such a method your DNS server provides a different IP address every time you request the IP address of the server based upon its name.

As an example I have created a domain to play with in my own home setup. I have created the domain johanexample.com and I run a website on it which can be accessed via www.johanexample.com. This means that if I try to access www.johanexample.com, my laptop will request the IP address of this webserver from my BIND DNS server. The DNS server will answer that the IP address is 192.0.2.44. This is the situation shown in the example diagram.

Now I do think that a lot of people would like to see this page, and for this reason I have built a cluster of webservers, all ready to provide the content of the website www.johanexample.com to the users who want to see it. I have in total 4 webservers up and running, named node0, node1, node2 and node3, and they have the IP addresses 192.0.2.20, 192.0.2.21, 192.0.2.22 and 192.0.2.23.

So what I would like is that the first user who wants to visit my website is directed to node0, the second to node1, the third to node2, the fourth to node3, the fifth to node0 again, and so on. To make this happen I have to set up my BIND server accordingly. I have taken the following steps:

1) Create a zone file for the johanexample.com domain. I have created /etc/bind/zones/johanexample.com

2) Make sure the file johanexample.com is referred to in the named.conf.local file so it will be picked up in the main configuration of BIND. In my case I added:

    zone "johanexample.com" {
        type master;
        file "/etc/bind/zones/johanexample.com";
    };

3) Make sure you create a complete zone file. My zone file looks like the one below. I make more use of johanexample.com in my home network for testing, so not everything in it is needed for this example:

    ; johanexample.com
    $TTL 604800
    @     IN SOA ns1.johanexample.com. root.johanexample.com. (
              2012011501 ; Serial
              604800     ; Refresh
              86400      ; Retry
              2419200    ; Expire
              604800 )   ; Negative Cache TTL
    ;
    @     IN NS ns1
          IN MX 10 mail
          IN A 192.168.1.109
    ns1   IN A 192.168.1.109
    mail  IN A 192.0.2.128
    www   IN A 192.0.2.20
          IN A 192.0.2.21
          IN A 192.0.2.22
          IN A 192.0.2.23
    node0 IN A 192.0.2.20
    node1 IN A 192.0.2.21
    node2 IN A 192.0.2.22
    node3 IN A 192.0.2.23

What is specifically needed for the load-balancing part are the records associated with www. We use www in combination with johanexample.com, and where a "normal" setup has only one IP address for the server name, you now see 4 different ones:

    www   IN A 192.0.2.20
          IN A 192.0.2.21
          IN A 192.0.2.22
          IN A 192.0.2.23
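
A typo in one of these addresses is easy to make and hard to spot by eye. As a minimal sketch, and not part of the setup described here, the records for www could be generated from a list of node addresses with Python's standard ipaddress module validating each one. The function name render_round_robin_records is made up for this illustration.

```python
import ipaddress


def render_round_robin_records(owner, addresses):
    """Return zone-file lines that give `owner` one A record per address.

    Hypothetical helper for illustration; it is not part of BIND.
    """
    lines = []
    for index, address in enumerate(addresses):
        # ip_address() raises ValueError for impossible values such as 291.0.2.23.
        parsed = ipaddress.ip_address(address)
        if parsed.version != 4:
            raise ValueError(f"{address} is not IPv4; A records need IPv4")
        # Only the first line names the owner; the indented lines inherit it.
        label = owner if index == 0 else " " * len(owner)
        lines.append(f"{label} IN A {parsed}")
    return lines


nodes = ["192.0.2.20", "192.0.2.21", "192.0.2.22", "192.0.2.23"]
print("\n".join(render_round_robin_records("www", nodes)))
```

The indentation of the continuation lines matters in a real zone file: a line that starts with whitespace reuses the owner name of the previous record.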

I have also created records in the zone file for all the specific nodes, so I can access them by making use of node0.johanexample.com, node1.johanexample.com, and so on. This is not needed for the load balancing itself, however it can be very handy from time to time. When you play around with your zone files and try some things, it is good to know that next to the better-known utility named-checkconf you also have a utility to check your zone file: named-checkzone. In this example the way to use it would be:

    root@debian-bind:/# named-checkzone johanexample.com /etc/bind/zones/johanexample.com
    zone johanexample.com/IN: loaded serial 2012011501
    OK
    root@debian-bind:/#

When your zone file is correctly configured and you have assigned multiple IP addresses to one name, you should be able to see the load balancing happening when you, for example, run an nslookup command like in the example below:

    root@debian-bind:/# nslookup www.johanexample.com
    Server: 192.168.1.109
    Address: 192.168.1.109#53
    Name: www.johanexample.com
    Address: 192.0.2.21
    Name: www.johanexample.com
    Address: 192.0.2.22
    Name: www.johanexample.com
    Address: 192.0.2.23
    Name: www.johanexample.com
    Address: 192.0.2.20
    root@debian-bind:/#

A second check could be to do multiple ping commands and you will see the target IP change:

    root@debian-bind:/# ping www.johanexample.com
    PING www.johanexample.com (192.0.2.23) 56(84) bytes of data.
    root@debian-bind:/# ping www.johanexample.com
    PING www.johanexample.com (192.0.2.22) 56(84) bytes of data.
    root@debian-bind:/# ping www.johanexample.com
    PING www.johanexample.com (192.0.2.21) 56(84) bytes of data.
    root@debian-bind:/# ping www.johanexample.com
    PING www.johanexample.com (192.0.2.20) 56(84) bytes of data.
    root@debian-bind:/# ping www.johanexample.com
    PING www.johanexample.com (192.0.2.23) 56(84) bytes of data.
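
The same check can be scripted. The sketch below is only an illustration: it resolves a name several times with Python's socket.gethostbyname_ex and counts which address comes first in each answer. The function name tally_first_address and the resolve parameter are made up here; the parameter exists so the logic can be tried without a DNS server. Note that a caching resolver on the client may hide the rotation.

```python
import socket
from collections import Counter


def tally_first_address(hostname, attempts=8, resolve=socket.gethostbyname_ex):
    """Resolve `hostname` repeatedly and count which address is listed first.

    `resolve` must behave like socket.gethostbyname_ex and return a
    (name, aliases, addresses) tuple; it is injectable for offline testing.
    """
    counts = Counter()
    for _ in range(attempts):
        _name, _aliases, addresses = resolve(hostname)
        counts[addresses[0]] += 1
    return counts
```

Calling tally_first_address("www.johanexample.com") against a setup like the one above should, when the rotation reaches the client, spread the counts over the four node addresses rather than pile them on one.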

Wednesday, January 11, 2012

Oracle failed to access the USB subsystem

When you are running Oracle VirtualBox on a Linux host you can run into issues when you try to start a virtual machine for the first time. It can give you a warning that something is wrong with the connection from the guest to the host's USB subsystem.

Failed to access the USB subsystem. VirtualBox is not currently allowed to access USB devices. You can change this by adding your user to the 'vboxusers' group. Please see the user manual for a more detailed explanation. Result Code: NS_ERROR_FAILURE (0x00004005)

To resolve this you can quickly add your username to the group by using the usermod command (for example usermod -a -G vboxusers yourusername, run as root) or by editing the file /etc/group with vi. An important thing to note is that you have to log out and log in again to make sure the changes are picked up correctly. Only closing VirtualBox and starting it again is not enough for the changes to be reflected in the application.
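
The need to log in again can be made visible with a small sketch. It assumes a Unix host and uses only Python's standard grp, os and getpass modules; the function name group_status is made up for this illustration. It compares the group database (which usermod changes right away) with the groups of the running process (which are fixed at login).

```python
import getpass
import grp
import os


def group_status(group_name, user=None):
    """Compare the group database with the groups of the running process.

    `in_database` looks at the member list stored for the group, and
    `in_session` looks at the process that runs this script, so it is
    only meaningful when `user` is the user running it.
    """
    user = user or getpass.getuser()
    try:
        entry = grp.getgrnam(group_name)
    except KeyError:
        # The group does not exist on this host.
        return {"exists": False, "in_database": False, "in_session": False}
    return {
        "exists": True,
        # Membership as recorded in the group database.
        "in_database": user in entry.gr_mem,
        # Supplementary groups of the current process, set at login time.
        "in_session": entry.gr_gid in os.getgroups(),
    }
```

If group_status("vboxusers") reports in_database as True but in_session as False, the change has been made but the current login session has not picked it up yet.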

Thursday, January 05, 2012

big data vision from Oracle

Big data is one of the key areas in which companies are currently trying to gain market share. In the field of big data it is key to be able to handle large sets of data. Companies working on big data solutions and with large sets of data commonly look at NoSQL and Hadoop-like solutions as well as supercomputing solutions. My personal preference is for the NoSQL and Hadoop-like solutions and not so much for supercomputing or HPC. Even though HPC solutions like Oracle Exadata and Exalogic are a fit for some situations, they are primarily a solution for large enterprise-style companies.

Oracle is really willing to jump into the big data game and is not only betting on the Exadata and Exalogic solutions; it is also trying to provide solutions in the form of Hadoop APIs and Oracle NoSQL. Missing the big data train would mean Oracle losing its dominant position in the data and database market, which is not an option for them. This means we will most likely see a lot more of Oracle in the big data market in the upcoming time, not only with (big) appliances but also with (open source) software solutions.

In this video, Antony Wildey from Oracle Retail explains what Big Data is, and why effective management of data is vital to retailers in gaining actionable insight into how to improve their business. It includes how Oracle can help businesses to use data from social networking sites such as Facebook and Twitter, and use sentiment analysis seamlessly to provide insight on product demand.

Wednesday, January 04, 2012

smart grid solution

The way we use energy, the way we distribute it and the way we produce it will have to change in the upcoming years. We have already seen how smart metering and billing are coming into play, and you see smart grids coming up. The company I am currently working for is also heavily investing in this area, and it is a very exciting field to work in and to think about. Especially the smart grid options we can design in the upcoming years are exciting.

"A smart grid is a digitally enabled electrical grid that gathers, distributes, and acts on information about the behavior of all participants (suppliers and consumers) in order to improve the efficiency, importance, reliability, economics, and sustainability of electricity services."

An interesting talk at Google by Erich Gunther can be found below:

Presented by Erich W. Gunther.

The smart grid is a big topic these days, but before there was a smart grid newspaper headline, the utilities have been experimenting with TCP/IP in the backend networks for a while now. Erich Gunther of enernex (www.enernex.com) will present a reference model and concept of network operations for the power industry including how Internet Protocols fit in that space. Along the way he will touch on what has worked, what hasn't and some of the security issues along the way.

Erich W. Gunther is the co-founder, chairman and chief technology officer for EnerNex Corporation - an electric power research, engineering, and consulting firm - located in Knoxville Tennessee. With 30 years of experience in the electric power industry, Erich is no stranger to smart grid - he has been involved in defining what smart grid is before the term itself was coined.

Oracle DNS-Based Load-Balancing e-Business Suite

Recently Elke Phelps and Sriram Veeraraghavan presented a talk named "Advanced E-Business Suite Architectures", which covered quite some interesting topics. You can watch a re-run of the presentation via the weblog of Steven Chan, and you can also download the presentation from the oracle.com website in PDF format.

One of the interesting subjects covered during the talk was the load-balanced configuration of Oracle e-Business Suite. This is a very interesting topic because it can help you in several parts of your architecture and your enterprise IT planning. It can help you balance load within one datacenter, it can help you balance the load over multiple datacenters, and it can help you create a more resilient solution. Within the presentation, examples of load-balancing solutions were given in the form of DNS-based and HTTP-layer-based load-balancing. We will focus on DNS-based load-balancing; the slide from the presentation given by Sriram and Elke is shown below.

The example used in the presentation from Oracle is about Oracle e-Business Suite, however you can use DNS-based load-balancing for all kinds of applications, and primarily for web-based applications. In the example below you can see a non-load-balanced web application. A user will request the IP address of the URL (somedomain.com = 101.102.103.101), where the .101 IP is the IP of web-node-0 within the DMZ in DC-0 (datacenter-0). This is quite straightforward and is in essence how all web applications work.

If you are planning a more resilient solution than the one above, which has a number of single points of failure, the first thing we have to look at is the number of web nodes. You can have one web node up and running and a second one on standby for when the first fails. This is a fail-over solution in which you can choose between hot standby and cold standby.

A better solution might be to have both web nodes up and running and have both of them handle load. This way the load can be spread evenly, and when one of the web nodes fails the other can handle the full load. This is shown in the image below, where we have "web node 0" and "web node 1". In this situation you have to configure your DNS server to provide the IP address of either "web node 0" or "web node 1" when the user requests the IP address of the URL. To provide a good load-balancing mechanism you will need a round-robin (like) algorithm to make sure the load is distributed among "web node 0" and "web node 1".
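
As a minimal sketch of such a round-robin rotation, the Python below hands out the address list and then moves the first address to the end, so the next request starts with the next node. It is a simulation of the idea only, not how any particular DNS server implements it; the class name RoundRobinRecordSet and the 198.51.100.x addresses are made up for this illustration.

```python
from collections import deque


class RoundRobinRecordSet:
    """Simulate a DNS name whose addresses rotate on every answer."""

    def __init__(self, addresses):
        self._addresses = deque(addresses)

    def answer(self):
        """Return the current order, then move the first address to the end."""
        current = list(self._addresses)
        self._addresses.rotate(-1)
        return current


# Hypothetical addresses for "web node 0" and "web node 1".
records = RoundRobinRecordSet(["198.51.100.10", "198.51.100.11"])
first_choices = [records.answer()[0] for _ in range(4)]
print(first_choices)
```

With two nodes the first address of each answer alternates between them, which is what spreads the users over both web nodes.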

"Round robin DNS is often used for balancing the load of geographically distributed Web servers. For example, a company has one domain name and three identical web sites residing on three servers with three different IP addresses. When one user accesses the home page it will be sent to the first IP address. The second user who accesses the home page will be sent to the next IP address, and the third user will be sent to the third IP address. In each case, once the IP address is given out, it goes to the end of the list. The fourth user, therefore, will be sent to the first IP address, and so forth."

In the above example we still have some possible issues. For example; what happens when "web node 0" is down? In the above example the DNS server will still think the node is up and will provide the IP address of the malfunctioning node to users whenever the round robin algorithm decides it is it's turn. Having a smart mechanism which checks the availability of the node and which removes the IP address from the DNS server so it will not be used when the node is down can be considered as a best practice. Having such a heart beat mechanism for DNS should be in your overall design.
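
A very small version of such a check can be sketched in Python. The sketch assumes that "up" simply means a TCP connection to port 80 succeeds, which is a simplification, and it only computes which addresses should stay in the record set; actually updating the DNS server is left out. The function names and addresses are made up for this illustration.

```python
import socket


def tcp_port_open(address, port=80, timeout=1.0):
    """Return True when a TCP connection to address:port succeeds in time."""
    try:
        with socket.create_connection((address, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts and timeouts.
        return False


def healthy_addresses(addresses, is_up=tcp_port_open):
    """Keep only the addresses whose node passes the `is_up` probe."""
    return [address for address in addresses if is_up(address)]
```

The probe is passed in as a parameter so that a stricter check, for example one that requests a status page, can replace the plain TCP connect without changing the filtering logic.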

A second single point of failure is the DNS server itself. If the DNS server is down it will not be able to provide the needed IP addresses when requested for by a user and it will not be able to balance the load over "web node 0" and "web node 1". It is common practice for most publicly used DNS servers to have a domain registered in a primary and a secondary name server. To prevent your solution from malfunctioning in the case a DNS server issue you should have at least a primary and a secondary DNS server and possibly even a tertiary DNS server. Having multiple DNS servers for a single domain name is within the design of how DNS name resolving works and is not something you should (really) worry about.

The above example is protecting you against the failure of a DNS server and the failure of one of your web nodes. It is however not protecting you against the failure of your entire datacenter. If datacenter DC-0 would be lost due to, for example a fire, your DNS servers would have nothing to point at. You do however have the solution already at hand with the round robin solution in your DNS servers. You can use a dual datacenter solution where you deploy your web nodes in both. Now the round robin DNS server will route users to both datacenters and to both web nodes. Meaning, the DNS server will provide routing to all 4 IP addresses of all 4 web nodes by making use of the round robin mechanism.

As you can see in the above diagram we have also introduced data synchronization between DC-0 and DC-1 to make sure that the data is replicated to both datacenters. For replication of data between multiple datacenters you can deploy several mechanisms. Some are based on a solution in the database, some on how the application is developed and some make use of block storage replication. Which one is best for your solution depends on a large number of variables, for example the distance between your locations, the type of storage, the type of application and the level of data integrity needed. How this needs to be done is something you have to think about very carefully, as it can impact the stability, availability, extensibility and speed of your application.

One of the interesting subjects covered during the talk was the load-balanced configuration for Oracle e-Business Suite. This is a very interesting topic because it can help you in several parts of your architecture and your enterprise IT planning. It can help you balance load within one datacenter, it can help you balance load over multiple datacenters and it can help you create a more resilient solution. Within the presentation, examples of load-balancing solutions were given in the form of DNS-based and HTTP-layer-based load balancing. We will focus on DNS-based load balancing; the slide from the presentation given by Sriram and Elke is shown below.

The example used in the presentation from Oracle is about Oracle e-Business Suite; however, you can use DNS-based load balancing for all kinds of applications, and primarily for web-based applications. In the example below you can see a non-load-balanced web application. A user requests the IP address for the domain in the URL (somedomain.com = 101.102.103.101), where the .101 IP is the IP of web-node-0 within the DMZ in DC-0 (datacenter-0). This is quite straightforward and is, in essence, how all web applications work.

If you are planning for a more resilient solution than the one above, which has a number of single points of failure, the first thing to look at is the number of web nodes. You can have one web node up and running and a second one on standby for when the first fails. This is a fail-over solution, in which you can choose between hot standby and cold standby.

A better solution might be to have both web nodes up and running and have both of them handle load. This way the load can be spread evenly, and when one of the web nodes fails the other can handle the full load. This is shown in the image below, where we have "web node 0" and "web node 1". In this situation you have to configure your DNS server to provide the IP address of "web node 0" or "web node 1" when the user requests the IP address for the URL. To provide a good load-balancing mechanism you will need a round-robin (like) algorithm to make sure the load is distributed among "web node 0" and "web node 1".
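A round-robin rotation of this kind can be sketched in a few lines. This is a toy stand-in for illustration, not a real DNS server: the class name and the .102 and .103 addresses are assumptions, and real DNS servers typically rotate the order of the records in their answer rather than returning a single address.

```python
from collections import deque


class RoundRobinResolver:
    """Toy stand-in for a DNS server that rotates its answers."""

    def __init__(self, addresses):
        self._addresses = deque(addresses)

    def resolve(self):
        # Hand out the address at the front, then move it to the end of the list.
        address = self._addresses[0]
        self._addresses.rotate(-1)
        return address


resolver = RoundRobinResolver(
    ["101.102.103.101", "101.102.103.102", "101.102.103.103"]
)
answers = [resolver.resolve() for _ in range(4)]
print(answers)
```

The fourth request gets the first address again, because each address moves to the end of the list once it has been handed out.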

"Round robin DNS is often used for balancing the load of geographically distributed Web servers. For example, a company has one domain name and three identical web sites residing on three servers with three different IP addresses. When one user accesses the home page it will be sent to the first IP address. The second user who accesses the home page will be sent to the next IP address, and the third user will be sent to the third IP address. In each case, once the IP address is given out, it goes to the end of the list. The fourth user, therefore, will be sent to the first IP address, and so forth."

Tuesday, January 03, 2012

The unhosted project

A 5-minute speed talk on the unhosted project. I am not a big fan of the unhosted project in its current form; however, I can see some good coming from it, so I would keep an eye on it.

The speech below is given by the guys from the unhosted project and offers an insight into the project within the 5-minute time limit set for the speed talks at the CCC 28C3 conference. One interesting thing you might want to dive into is their statement that you can use BrowserID instead of OpenID, which I think you might want to take a look at when you are developing web applications and the security around them.

Web applications usually come with storage attached to it, users can not choose where their data is stored. Put plainly: You get their app, they get your data. We want to improve the web infrastructure by separating web application logic from per-user data storage: Users should be able to use web services they love but keep their life stored in one place they control -- a »home folder« for the web. At the same time, application developers shouldn't need to bother about providing data storage. We also believe that freedom on the web is not achieved by freely licensed web applications running on servers you can't control. That's why applications should be pure Javascript which runs client-side, all in the browser. It doesn't matter if free or proprietary -- everything can be inspected and verified. Technically speaking, we define a protocol stack called remoteStorage. A combination of WebFinger for discovery, either BrowserID or OAuth for authorization, CORS (Cross-Origin Resource Sharing) for cross-domain AJAX calls and GET, PUT, DELETE for synchronization. We also work on its adoption through patching apps and storage providers.
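To make the GET, PUT, DELETE part of that description a bit more concrete, here is a minimal sketch of the kind of HTTP requests a client could prepare against a per-user storage location. It is not the actual remoteStorage API: the storage URL, the token, the bearer-style header and the helper name `build_request` are all assumptions for illustration, and the requests are only built, not sent.

```python
import json
import urllib.request

# Hypothetical values: a real client would discover the storage URL via WebFinger
# and obtain a token through BrowserID or OAuth.
STORAGE_ROOT = "https://storage.example.com/alice"
TOKEN = "example-token"


def build_request(method, key, value=None):
    """Build (but do not send) an HTTP request for one stored item."""
    body = None if value is None else json.dumps(value).encode("utf-8")
    headers = {"Authorization": f"Bearer {TOKEN}"}
    if body is not None:
        headers["Content-Type"] = "application/json"
    return urllib.request.Request(
        f"{STORAGE_ROOT}/{key}", data=body, headers=headers, method=method
    )


requests_to_send = [
    build_request("PUT", "notes/todo", {"text": "watch the 28C3 talks"}),
    build_request("GET", "notes/todo"),
    build_request("DELETE", "notes/todo"),
]
for request in requests_to_send:
    print(request.get_method(), request.full_url)
```

The point of the sketch is only that the application talks to a storage location chosen by the user, using plain HTTP verbs, instead of to storage owned by the application provider.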

Reverse Engineering USB Devices

Drew Fisher gave a speech at the CCC convention on the subject of reverse engineering USB and how you can tap into the protocol. If you are interested in playing with USB and understanding its inner workings, this is a very good place to start. You might also want to check his website zarvox.org, which holds some great information that can get you started.

The YouTube video below shows the speech Drew gave at 28C3.

While USB devices often use standard device classes, some do not. This talk is about reverse engineering the protocols some of these devices use, how the underlying USB protocol gives us some help, and some interesting patterns to look for. I'll also detail the thought processes that went into reverse engineering the Kinect's audio protocol.

This talk will narrate the process of reverse engineering the Kinect audio protocol -- analyzing a set of USB logs, finding patterns, building understanding, developing hypotheses of message structure, and eventually implementing a userspace driver.

I'll also cover how the USB standard can help a reverse engineer out, some common design ideas that I've seen, and ideas for the sorts of tools that could assist in completing this kind of task more efficiently.

Monday, January 02, 2012

The coming war on general computation

Cory Doctorow, who you might know from his writing and his weblog craphound.com, has given a great speech and Q&A session at the CCC 28C3 convention with the title "The coming war on general computation".

You can watch the recording via the YouTube video below.

Description:

The last 20 years of Internet policy have been dominated by the copyright war, but the war turns out only to have been a skirmish. The coming century will be dominated by war against the general purpose computer, and the stakes are the freedom, fortune and privacy of the entire human race.

The problem is twofold: first, there is no known general-purpose computer that can execute all the programs we can think of except the naughty ones; second, general-purpose computers have replaced every other device in our world. There are no airplanes, only computers that fly. There are no cars, only computers we sit in. There are no hearing aids, only computers we put in our ears. There are no 3D printers, only computers that drive peripherals. There are no radios, only computers with fast ADCs and DACs and phased-array antennas. Consequently anything you do to "secure" anything with a computer in it ends up undermining the capabilities and security of every other corner of modern human society.

And general purpose computers can cause harm -- whether it's printing out AR15 components, causing mid-air collisions, or snarling traffic. So the number of parties with legitimate grievances against computers are going to continue to multiply, as will the cries to regulate PCs.

The primary regulatory impulse is to use combinations of code-signing and other "trust" mechanisms to create computers that run programs that users can't inspect or terminate, that run without users' consent or knowledge, and that run even when users don't want them to.

The upshot: a world of ubiquitous malware, where everything we do to make things better only makes it worse, where the tools of liberation become tools of oppression.

Our duty and challenge is to devise systems for mitigating the harm of general purpose computing without recourse to spyware, first to keep ourselves safe, and second to keep computers safe from the regulatory impulse.

Ubuntu launcher settings

Microsoft Windows has had a taskbar since Windows 95, and at the time we all found it a revolution. At least I did, and I thought it was quite a smart move for people coming from pure command line and Windows 3.11 systems.

Now we are all used to taskbars and start buttons, and we see them change between Windows versions. We also see that other operating systems have adopted the same way of working in all kinds of ways. The operating system from Apple also has some sort of taskbar at the bottom of the screen from which you can launch new programs when needed. Linux also provides a taskbar-like function.

Under Ubuntu it is called the launcher, and it is also used as some sort of taskbar and start menu. As I mostly use Linux in a quite basic form and do the rest of my day-to-day work on my MacBook or corporate Windows machine, I have not had much time to play with the settings of the launcher. However, I am reinstalling one of my main workstations: I removed Solaris and installed Ubuntu to use it for day-to-day work.

Changing the settings for the Ubuntu Unity Launcher is quite simple: you can install a configuration tool called compizconfig-settings-manager. You can simply apt-get the tool and change all the settings you like.
